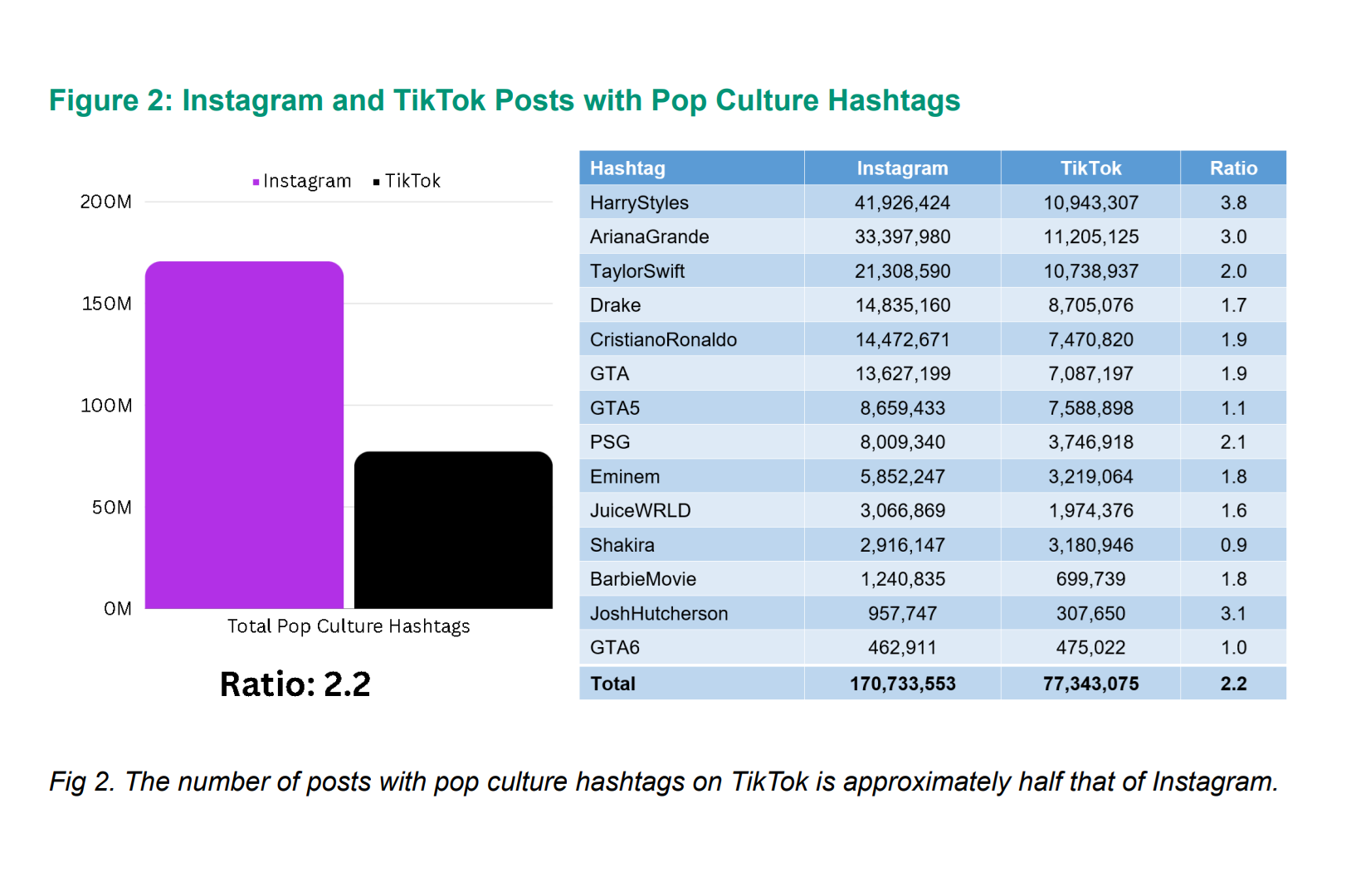

The Network Contagion Research Institute at Rutgers University recently dropped a report that sheds light on some intriguing disparities in how politically charged hashtags play out on TikTok versus Instagram. This deep dive into cyber threats on social media takes a closer look at hashtags like "Tiananmen," "HongKongProtest," and "FreeTibet," stacked against mainstream tags like "TaylorSwift" and "Trump."

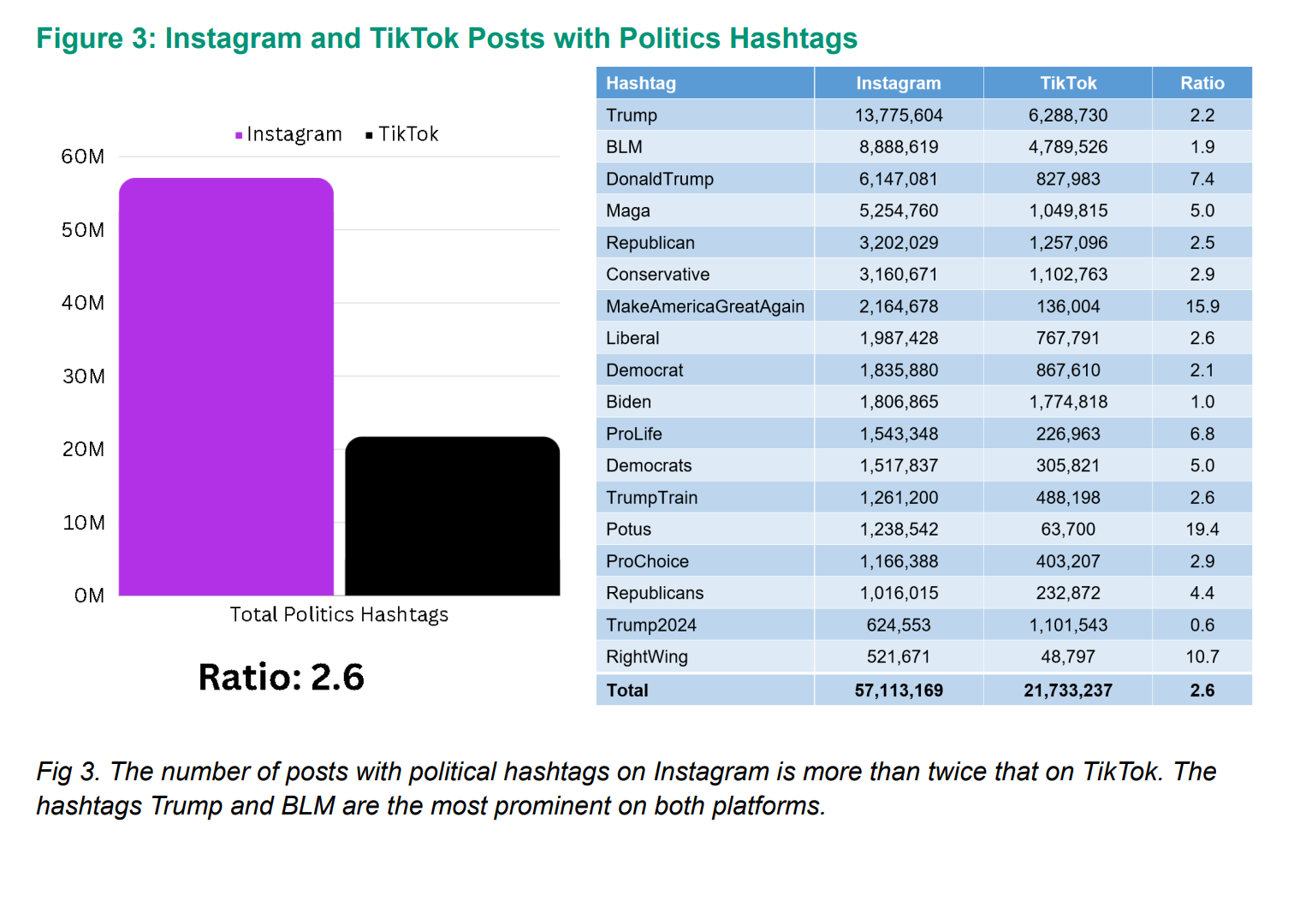

To level the playing field, considering the differences in user base and platform longevity, researchers meticulously crunched the numbers on hashtag ratios across diverse political and pop-culture topics on both platforms. What's surprising is that TikTok seems to be sporting fewer politically charged hashtags, suggesting a potential alignment with the preferences of the Chinese government.

Pop-culture hashtags like "ArianaGrande" show up twice as often on Instagram compared to TikTok, and US political hashtags like "Trump" or "Biden" appear 2.6 times more on Instagram. But the real eye-opener is with hashtags like "FreeTibet," which is a whopping 50 times more common on Instagram. "FreeUyghurs" clocks in at 59 times more prevalent on Instagram, and "HongKongProtest" is a staggering 174 times more frequent on Instagram than TikTok.
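To make the comparison concrete, here is a minimal sketch of the kind of ratio arithmetic described above. It is not NCRI's actual methodology: the function names, the post counts, and the idea of dividing each political ratio by the pop-culture ratio are assumptions for illustration. Only the ratios themselves (2, 50, 59 and 174) come from the article.

```python
"""Sketch: Instagram-to-TikTok hashtag ratios measured against a pop-culture baseline."""


def platform_ratio(instagram_posts: int, tiktok_posts: int) -> float:
    """Return how many times more often a hashtag appears on Instagram than on TikTok."""
    if tiktok_posts <= 0:
        raise ValueError("tiktok_posts must be positive")
    return instagram_posts / tiktok_posts


def gap_vs_baseline(topic_ratio: float, baseline_ratio: float) -> float:
    """Scale a topic's ratio by a baseline ratio (illustrative, not the report's metric)."""
    if baseline_ratio <= 0:
        raise ValueError("baseline_ratio must be positive")
    return topic_ratio / baseline_ratio


# Ratios as quoted in the article (Instagram relative to TikTok).
REPORTED_RATIOS = {
    "FreeTibet": 50.0,
    "FreeUyghurs": 59.0,
    "HongKongProtest": 174.0,
}
# "ArianaGrande" shows up twice as often on Instagram, used here as the baseline.
POP_CULTURE_BASELINE = 2.0

if __name__ == "__main__":
    # Hypothetical post counts, chosen only to show how one ratio is formed.
    print(platform_ratio(instagram_posts=1_000, tiktok_posts=20))

    for tag, ratio in REPORTED_RATIOS.items():
        print(tag, gap_vs_baseline(ratio, POP_CULTURE_BASELINE))
```

Read this way, a hashtag like "FreeTibet" is about 25 times further skewed toward Instagram than a typical pop-culture tag, and "HongKongProtest" about 87 times.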

The report's takeaway? TikTok's content promotion or suppression seems to be playing along with the tunes of the Chinese government's interests.

Images: NetworkContagion

TikTok is under the microscope of US politicians, who are closely scrutinizing how it handles political content. With its parent company, ByteDance, planted in China, a country flagged as a foreign adversary by the US, concerns are mounting about potential information manipulation to serve political agendas. Attempts to ban the app have hit legal roadblocks, leaving TikTok squarely in the crossfire of geopolitical tensions.

Granted, the report has its limitations, with challenges in isolating hashtag analysis to post-TikTok launch periods. TikTok fires back at the findings, calling out flawed methodology and stressing that hashtags are user-generated, not dictated by the platform. They argue that the content in question is readily available, dismissing claims of suppression as baseless, and highlighting that one-third of TikTok videos don't even bother with hashtags.

Joel Finkelstein, co-founder at NCRI, acknowledges the data constraints but stands firm on the report's conclusions based on publicly available information. Despite the hurdles, the study provides valuable insights into the nuanced dynamics of hashtag usage across these two influential social media platforms.

When we look at how social media handles conflicts, it seems like platforms such as Facebook, Instagram, and even TikTok might be leaning towards supporting their own bottom lines. This raises concerns about whether these platforms prioritize free speech and unbiased information or if they have their own biases. It's not just about one platform; it's a broader issue highlighting that social media lacks consistent moral and ethical standards. Instead, these platforms often follow their own agendas, sometimes putting profit before sharing accurate information and promoting fair free speech. This raises important questions about the need for better regulations to ensure that social media platforms operate ethically and responsibly while navigating complex global issues.

Read next: Amnesty International Confirms India’s Government Uses Infamous Pegasus Spyware

by Irfan Ahmad via Digital Information World

"Mr Branding" is a blog based on RSS for everything related to website branding and website design, it collects its posts from many sites in order to facilitate the updating to the latest technology.

To suggest any source, please contact me: Taha.baba@consultant.com

Friday, December 29, 2023

PRISMA's Cat and Mouse Game - Cracking Google's MultiLogin Mystery

CloudSEK, a cybersecurity firm, found a sneaky way hackers can mess with Google accounts, and it's a bit of a head-scratcher. This method lets them stay logged in to a victim's account even after the victim changes the password. Sounds wild, right?

For starters, Google uses a system called OAuth2 for keeping things secure. It's like a fancy bouncer at a club, making sure only the right people get in. But these hackers, led by someone calling themselves PRISMA, figured out a trick to keep the party going.

They found a secret spot in Google's system, a hidden door called "MultiLogin." It's a tool Google uses to sync accounts across different services. PRISMA exploited this door, and the trick was put to work in malware called Lumma Infostealer to do the dirty work.

Now, the clever part is, even if you change your passwords, these hackers can keep sipping on their virtual cocktails. The malware they created knows how to regenerate these secret codes, called cookies, that Google uses to verify who you are.

CloudSEK's researchers say this is a serious threat. The hackers aren't just sneaking in once—they're setting up camp. Even if you kick them out by changing your password, they still have a way back in. It's like changing the locks on your front door, but they somehow still have a secret master key.

Researchers tried reaching out to Google to spill the beans, but so far, it's been crickets. No word from the tech giant on how they plan to deal with this sneaky hack.

So, here we are, in a world where even resetting your password might not be enough to kick out the virtual party crashers. Stay tuned to see how Google responds to this unexpected security hiccup.

Read next: Researchers Suggest Innovative Methods To Enhance Security And Privacy For Apple’s AirTag

by Irfan Ahmad via Digital Information World

Thursday, December 28, 2023

Unlocking Creativity and Problem-Solving: 34% of Users Leverage Free AI Tools, New Survey Finds

Atomicwork's report, “The State of AI in IT-2024”, looks at how often people in North America use artificial intelligence (AI). According to the report, 75% of respondents in North America said that they frequently use free AI tools for their work, and 45% said that they use them weekly.

Respondents were also asked what they use free AI tools for. 34% said they use them to enhance their creativity and solve problems. 30% use them to write emails or edit already-written ones. Another 26% use them to create content or edit existing material. When asked whether they had heard of ChatGPT and Bard, 74% said that they were familiar with ChatGPT, but only 4% said that they had heard of Bard.

Respondents were also asked whether they were okay with their company using AI for work. A total of 52% said that they were okay with it. 21% are okay with AI at work because they believe AI is limited and cannot surpass the human brain. 18% said they are okay with it because they do not use it strictly for work. 9% are okay with it because they do not rely on AI completely. All of this data shows that most respondents, three-quarters according to the report, are pro-AI.

The survey also asked respondents which types of work they do not want AI to do. 39% do not want AI involved in ethical and legal decisions. 36% do not want it in customer relationship management. 33% do not want it in people management.

Read next: New Survey Shows More Than 50% Of Gen Z Adults Have Purchased From An Influencer Brand

by Arooj Ahmed via Digital Information World

New Survey Shows More Than 50% Of Gen Z Adults Have Purchased From An Influencer Brand

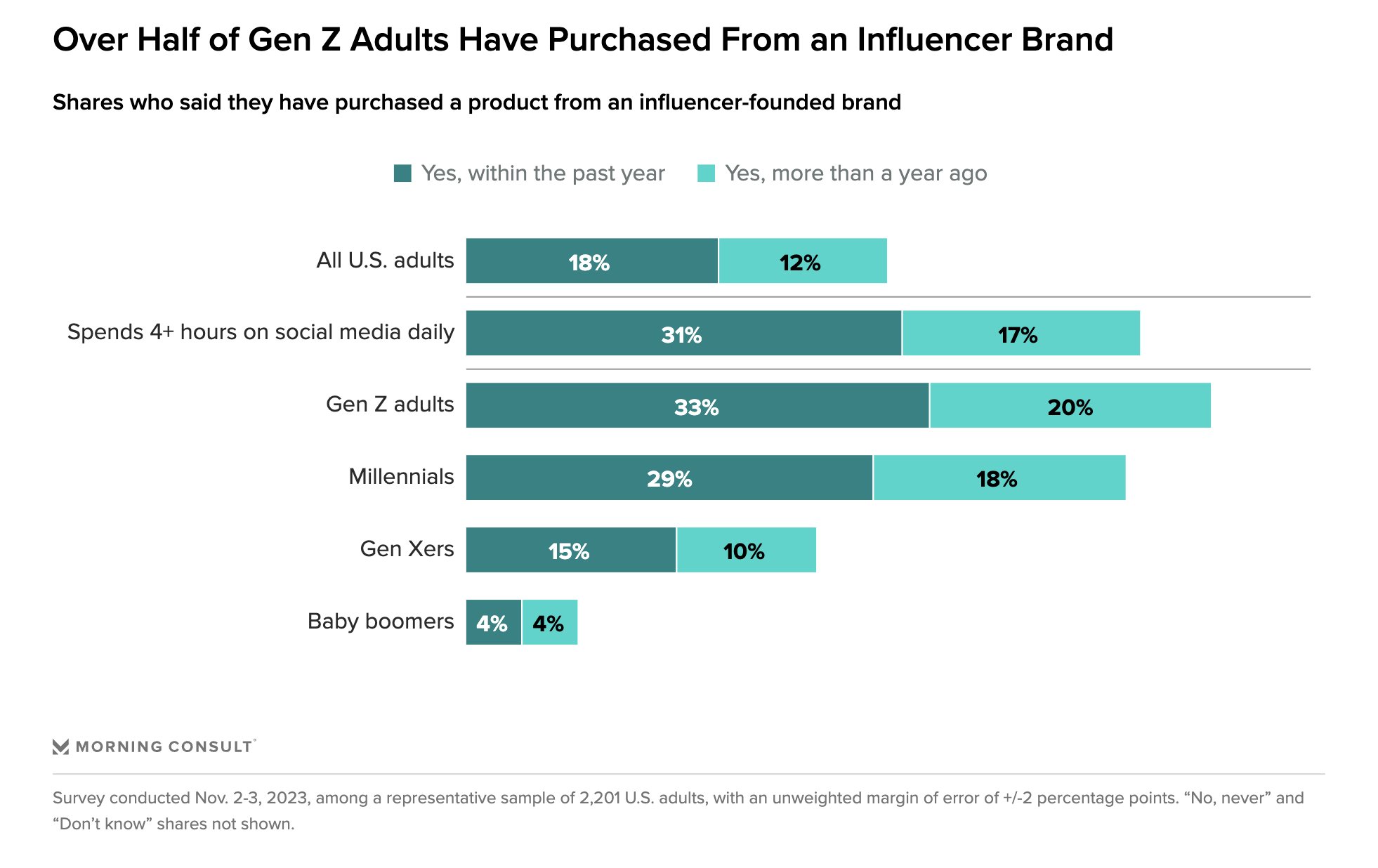

There was a time when influencers were seen only as well-known faces hired to speak for a brand. But as the latest statistics show, that's no longer the whole story.

Influencers are now growing so much in power that they can also drive a brand's revenue, diversify across platforms, and serve as standalone media channels without any assistance.

The news comes from a recently published Morning Consult study, which surfaced some key stats worth a mention. For instance, 53% of Gen Z consumers in the US have bought products from a brand founded by an influencer. For millennials, the figure stood at 47%.

A total of 2,200 US adults took part in the research at the start of November.

The study also took a deep look at the popular influencers who continue to do well in this domain. Their products need to align with their personal brand and be as original as possible, experts mentioned.

But before they can reach such a powerful position, influencers need a huge reach that impacts the masses and a platform they can call their own.

So what does this mean? Well, if you have your own brand or business, you might wish to consider working alongside influencers to stock and sell their goods. These stats suggest that Gen Z trusts influencers enough to buy from them directly.

The results also showed that these consumers belong to the group that spends close to four hours a day, on average, on social media. The stats showed no signs of a decline, and the dominating effect of social media influencers looks set to carry on into the coming year too.

Read next: Study Shows that AI Won’t Impact Jobs by 2026, Here’s Why

by Dr. Hura Anwar via Digital Information World

Blocker users defiant: Workarounds thrive despite YouTube's clampdown

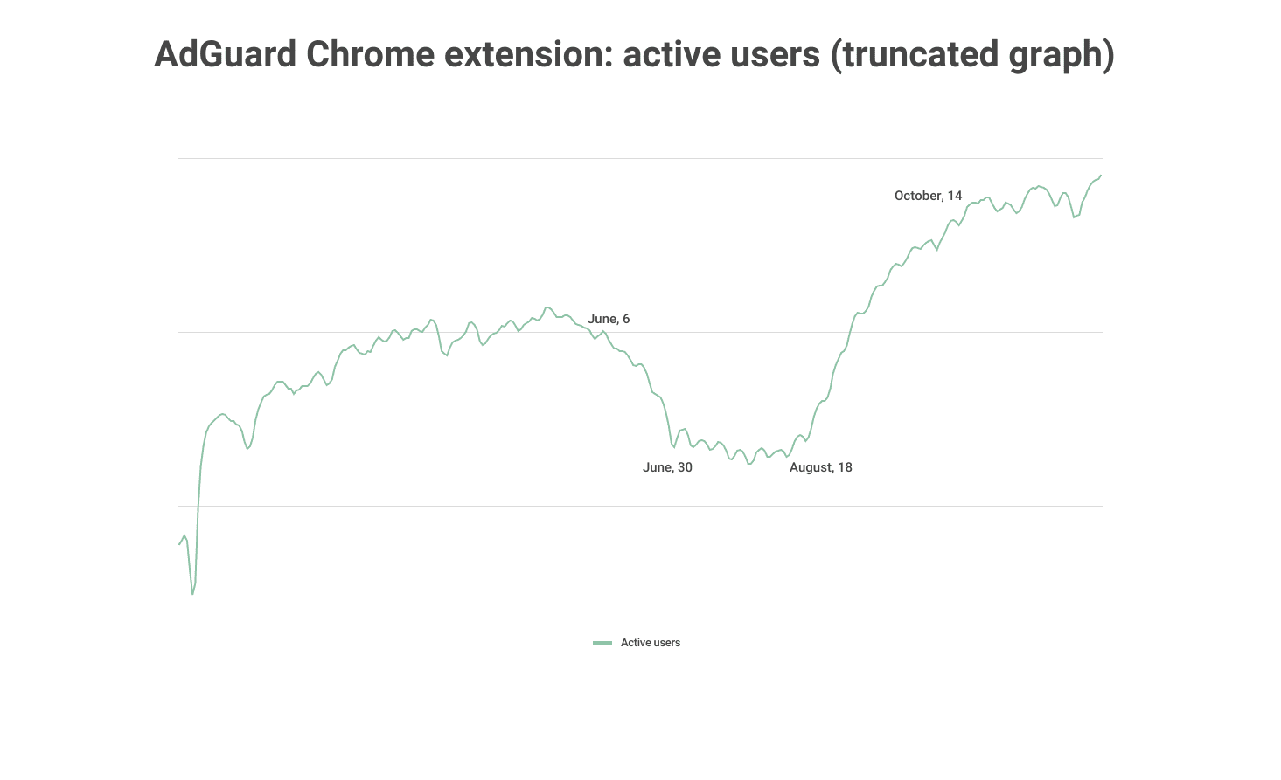

Back in May 2023, YouTube introduced a new policy targeting users who run ad blockers on the site. The aim was to get users to either watch YouTube with ads or pay for an ad-free subscription. The anti-ad-blocker policy was first applied to a few users, but YouTube has now rolled it out to all users worldwide. Users who rely on ad blockers to stop trackers or protect their privacy are not happy with this policy.

An ad-blocking company, Adguard, said that YouTube and ad blockers have never gotten along. Users block YouTube ads because they don't want ads interrupting their streaming. On the other hand, YouTube earns a lot of revenue from these ads, and part of its revenue also comes from users who subscribe to get rid of them.

YouTube isn't the only platform that has taken steps against ad blockers. But as one of the biggest platforms, with about 2.5 billion monthly users, its anti-ad-blocker policy is the one that went viral. When news of the policy spread, many users uninstalled their ad blockers, but others turned to workarounds that block YouTube's ad-blocker detection.

When YouTube pushed its anti-ad-blocker policy in June and August, usage of the Adguard ad blocker extension for Chrome dropped by 8%. But usage started climbing again after a month. Even with the policy in place, users are still using ad blockers, and several of them still work perfectly fine on YouTube. Despite this, users have concerns about their privacy while using those ad blockers. Alexander Hanff, a privacy campaigner, said that the script YouTube uses to recognize ad blockers breaches users' privacy. He argued that it is illegal and that YouTube should stop using it.

Image: Adguard/Cybernews

Read next: Google Agrees To Settlement After Being Accused Of Wrongly Collecting User Data Through Chrome’s Incognito Mode

by Arooj Ahmed via Digital Information World

Study Shows that AI Won’t Impact Jobs by 2026, Here’s Why

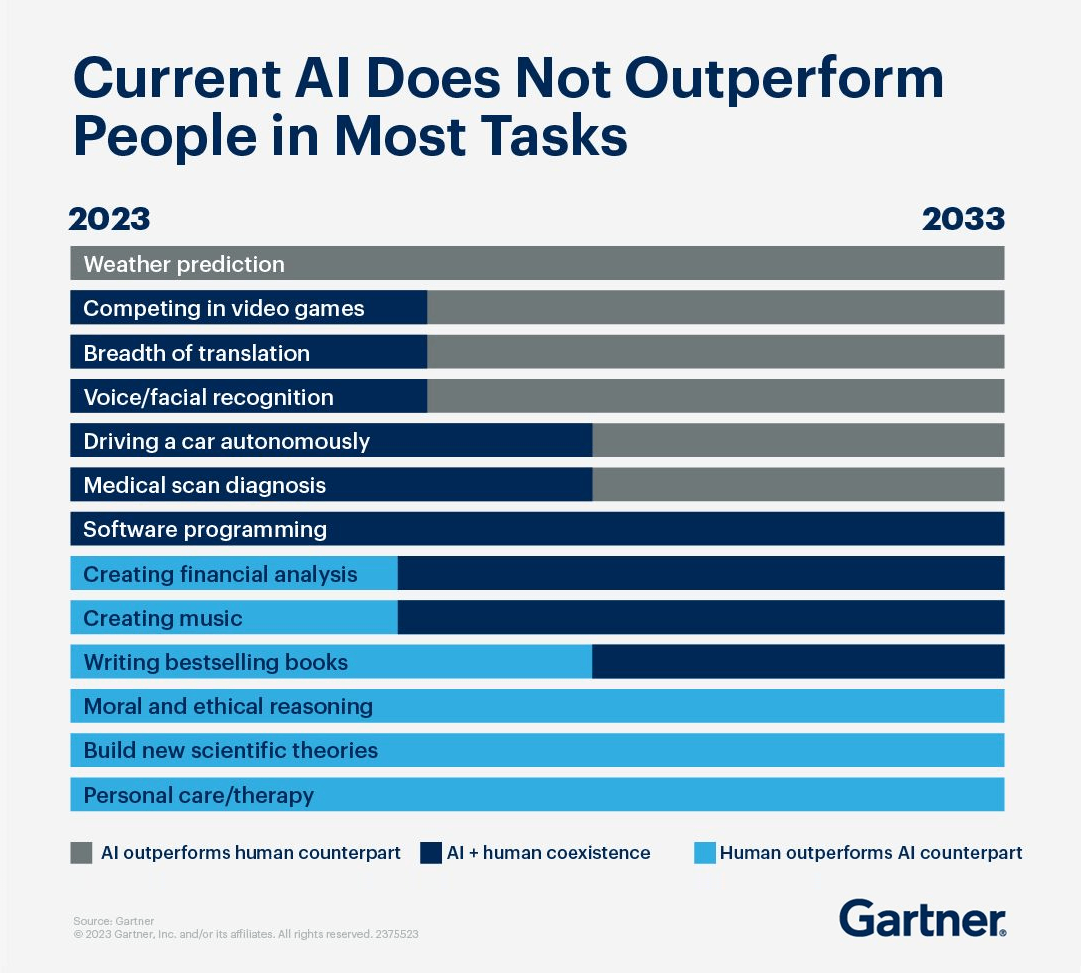

One of the biggest questions surrounding AI is how it will impact jobs. Many are concerned that AI will make jobs obsolete because it can perform various tasks more efficiently and effectively than people can. Even so, a study by Gartner has revealed that AI will have a minimal impact on the availability of jobs by 2026.

It is important to note that AI is not currently capable of outperforming humans at the vast majority of tasks. Three skills in particular are going to be secure through 2033: personal care and therapy, building new scientific theories, and moral and ethical reasoning. According to the study, humans will be better than AI at these skills at least until 2033.

Three other skills are also in the clear for now. AI can't come close to humans at them this year, though it is expected to reach parity at some point in the upcoming decade. These three skills are writing bestselling books, creating music, and conducting financial analyses. Once AI catches up with humans, it will likely complement the people performing these jobs rather than replace them entirely.

Of course, there are some skills where AI is expected to overtake humans. Software programming is one where AI is already on par with humans, although for now it remains a useful tool rather than a replacement. Apart from that, medical scan diagnosis, driving, facial recognition, translation and playing video games are all skills in which AI has equalled humans, and by the late 2020s it is expected to have replaced them.

It bears mentioning that most of these skills, save for medical scan diagnosis, do not correspond to highly valuable jobs, which suggests that not many jobs are going to be eliminated in the near future. The only skill that has already become obsolete in the face of AI is weather prediction, with most others holding their own for the time being.

Read next: AI Taking Jobs Trend Soared by 304% Globally in the Past Year, Led by Heightened Australian Concern

by Zia Muhammad via Digital Information World

Wednesday, December 27, 2023

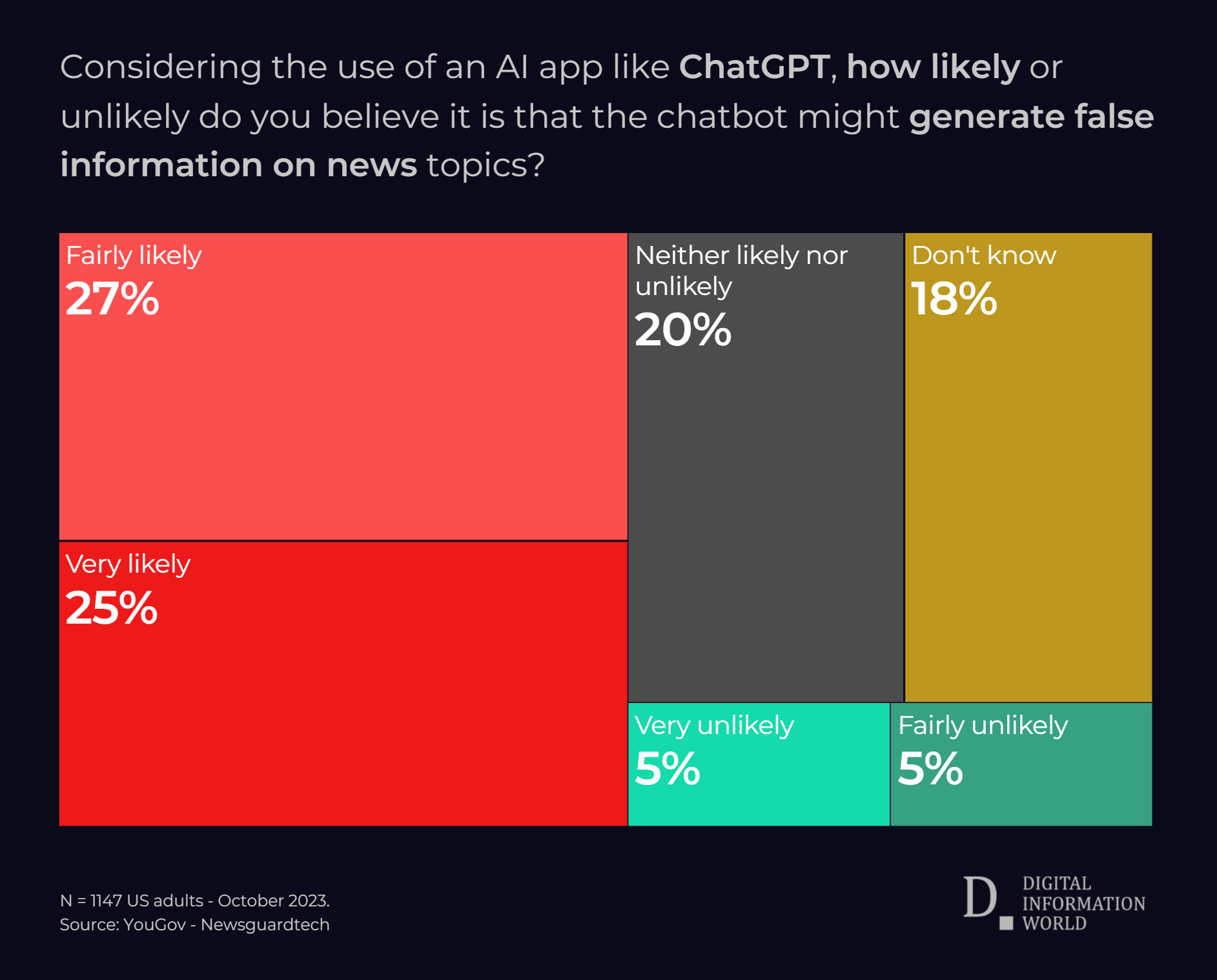

52% of Survey Respondents Think AI Spreads Misinformation

Generative AI has the ability to completely change the world, but many are worried about its ability to create and spread misinformation. A survey conducted by YouGov on behalf of NewsGuard revealed that as many as 52% of Americans think it's likely that AI will create misinformation in the news, with just 10% saying that the chances of this happening are low.

Generative models have a tendency to hallucinate. This means that a chatbot will generate information based on prompts regardless of whether or not it is factual. This doesn't happen all the time, but it occurs often enough that content produced by AI can't always be trusted.

When researchers tested GPT-3.5, they found that ChatGPT provided misinformation in 80% of cases. The prompts were specifically engineered to find out whether the chatbot was prone to misinformation, and in the vast majority of cases it failed the test.

There is a good chance that people will start using generative AI as their primary news source because it can provide instant answers. However, with so much misinformation being fed into these models, the risks will become increasingly difficult to ignore.

Election misinformation, conspiracy theories and other forms of false narratives are common in ChatGPT. In one example, researchers successfully made the model parrot a conspiracy theory about the Sandy Hook mass shooting, with the chatbot saying that it was staged. Similarly, Google Bard stated that the shooting at the Orlando Pulse club was a false flag operation, and both of these conspiracy theories are commonplace among the far right.

It is imperative that the companies behind these chatbots take steps to prevent them from spreading conspiracy theories and other misinformation that can cause tremendous harm. Until that happens, the dangers that they can create will start to compound in the next few years.

Read next: Global Surge in Interest - Searches for 'AI Job Displacement' Skyrocketed by 304% Over the Past Year

by Zia Muhammad via Digital Information World
