According to a recent survey, Gen-Z trusts YouTube more than any other social media platform. Business Insider partnered with YouGov on a survey of 1,800 participants. About 600 of them were Gen-Z, i.e., people born between 1997 and 2012, although only those over 18 years old were polled. The remaining participants came from other generations.

The survey asked Gen-Z participants which social media platform they trust the most. YouTube was the most common answer: 59% of Gen-Z respondents said that they completely or partially trust YouTube, while 26% of Gen-Z respondents between the ages of 18 and 26 said that they trust YouTube only a bit. Second on the list was Instagram. 40% of Gen-Z participants said that they trust Instagram, 45% said that they don't, and 15% were not sure.

Third on the list was X (formerly known as Twitter), with a 35% trust rate. 49% of Gen-Z participants are not sure whether they trust X, and 15% do not trust it at all. The platform that Gen-Z participants trusted least was Facebook: only 28% said that it's trustworthy, while 60% said that they don't trust Facebook at all. TikTok was a close second among the least trusted apps, right behind Facebook. 57% of Gen-Z users said that they do not trust TikTok, while 30% said that they partially trust it.

There are several reasons why Gen-Z finds YouTube more trustworthy than other apps. Facebook came out as the least trustworthy app among Gen-Z because of the many controversies it has faced in the past, and many Gen-Z people do not even have a Facebook account. It was also reported that many Gen-Z users haven't used X (Twitter) since Elon Musk purchased it. TikTok is seen as untrustworthy because of its security issues. In conclusion, YouTube is currently the most trusted app among Gen-Z.

Image: BI

Read next: AI In Workspace Isn’t Widespread But Employees Are Concerned About Its Impact On Their Careers, New Survey Proves

by Arooj Ahmed via Digital Information World

"Mr Branding" is an RSS-based blog covering everything related to website branding and website design. It collects its posts from many sites to make it easier to keep up with the latest technology.

To suggest any source, please contact me: Taha.baba@consultant.com

Sunday, December 31, 2023

AI In Workspace Isn’t Widespread But Employees Are Concerned About Its Impact On Their Careers, New Survey Proves

A recent survey has plenty of people talking, as it examines the growing use of AI in workplaces and its possible implications for careers.

CNBC and SurveyMonkey have published the details of what their latest Workforce survey has to say on the subject.

On average, only 30% of those taking part in the study use AI in the office. Despite this, more than 40% are worried about its implications for their careers.

Meanwhile, 50% of respondents wish their firm would offer more benefits, such as healthcare premiums and free catering to cover their daily meals.

Just 30% said that their employer provides financial coaching or advice when they most need it. In addition, 25% of Gen Z workers and 20% of Millennials said that they felt forced to invest on their own, because what they were being provided did not meet their short-term goals and they wanted larger gains in less time.

CNBC first published the results. SurveyMonkey carried out the poll online during the first week of December, and more than 7,000 workers took part.

Participants were chosen from the roughly 2 million people who routinely take part in SurveyMonkey’s polls, and the results were compared with those of previous years.

85% of workers said they were happy with their job, in line with previous years. Meanwhile, 72% agree they’re paid well and 90% feel their job is very meaningful. However, 36% have considered quitting at least once in the past three months. Lastly, 71% said that workplace morale had a positive effect on their performance.

It’s evident from the study that even though AI at work isn’t as prevalent as one might expect, concerns about it negatively affecting people’s work are on the rise. Employers therefore need to think carefully about how to introduce tech changes while keeping in mind their impact on a person’s growth, happiness, and well-being. After all, when the workplace is happy, the enterprise performs better, as people are more motivated to do their tasks accurately.

Other findings worth mentioning: 30% of participants confirmed using AI tools at work, with Gen Z and Millennials in the lead.

AI use was more common among employees of color, particularly Asian and Black workers, than among White employees.

Meanwhile, employees in research and consulting as well as logistics and business led in AI use, as did those in the finance and tech sectors.

Those using AI in the office were likely to see it as a major positive and a means to better productivity: 72% said it had a significant positive impact on their work, while just 28% described its impact as negative.

But even though a large majority spoke of the benefits such technology brings, 42% still worry that it could harm their careers in the future and perhaps cost them their jobs. 44% said they were somewhat worried.

Those making less than $50,000 a year were more worried than those earning between $50,000 and $99,000 a year. Workers of color also showed more concern than White workers.

Employees who used AI in the office expressed twice as much concern about its impact on their work as those who did not.

Photo: DIW-AIgen

Read next: Even Though Prompt Engineering is a Hot Skill, Better Communication with Humans will be More in Demand

by Dr. Hura Anwar via Digital Information World

Saturday, December 30, 2023

OpenAI's Financial Milestone: Breaking Down the $1.6 Billion Annualized Revenue

AI giant OpenAI has hit a significant financial milestone, surpassing $1.6 billion in annualized revenue, as reported by The Information. This is a considerable increase from the $1.3 billion recorded in mid-October. Despite recent controversies about its leadership, OpenAI has shown resilience and growth.

A major contributor to this financial success is OpenAI's ChatGPT product, an AI tool that generates text based on user input. It has become a focal point for investors in the artificial intelligence field, boosting the company's monthly revenue to an impressive $80 million. OpenAI lost $540 million in 2022 during the development of GPT-4 and ChatGPT. However, the company bounced back with the launch of an improved version of ChatGPT. This enhanced model comes with more features and better privacy measures, aiming to attract a wider customer base and increase profits from its main product.

OpenAI started in 2015 as a non-profit organization but transitioned to a capped-profit venture in 2019, a move that has proven successful. This change allowed OpenAI to attract significant investments from venture funds and gave employees a stake in the company. Microsoft's substantial investments in 2019 and 2023, along with providing computing resources through Microsoft's Azure cloud service, have strengthened OpenAI's financial position. With a $1 billion injection over five years, the company is strategically positioning itself to commercially license its advanced technologies.

Despite facing challenges like changes in leadership and high development costs, OpenAI's financial outlook remains strong. The company is currently in talks to secure more funding, with an expected valuation between $80 billion and $90 billion, comparable to Uber's market valuation. OpenAI aims to generate $1 billion in revenue in 2023, solidifying its position as a key player in the artificial intelligence industry.
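The article mixes annualized and monthly revenue figures, so a quick calculation helps relate them. The sketch below assumes that "annualized" means the latest monthly revenue multiplied by twelve, which is a common convention but not something the report confirms; the function names are illustrative only.

```python
def annualized_run_rate(monthly_revenue: float) -> float:
    """Project a yearly figure from one month of revenue (assumed convention)."""
    return monthly_revenue * 12


def implied_monthly(annualized_revenue: float) -> float:
    """Invert the projection: the monthly revenue an annualized figure implies."""
    return annualized_revenue / 12


def growth_percent(old: float, new: float) -> float:
    """Percentage change from an earlier figure to a later one."""
    return (new - old) / old * 100


if __name__ == "__main__":
    # Figures quoted in the article, in US dollars.
    mid_october = 1.3e9
    latest = 1.6e9
    monthly_quoted = 80e6

    print(f"Growth since mid-October: {growth_percent(mid_october, latest):.1f}%")
    print(f"Monthly revenue implied by $1.6B: ${implied_monthly(latest) / 1e6:.1f}M")
    print(f"Run rate implied by $80M/month: ${annualized_run_rate(monthly_quoted) / 1e9:.2f}B")
```

Under that assumption, $80 million a month works out to a run rate of about $0.96 billion, which is closer to the $1 billion goal for 2023 than to the $1.6 billion milestone. The quoted figures may therefore come from different points in the year.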

Image: DIW

Read next: Even Though Prompt Engineering is a Hot Skill, Better Communication with Humans will be More in Demand

by Irfan Ahmad via Digital Information World

Even Though Prompt Engineering is a Hot Skill, Better Communication with Humans will be More in Demand

If you are a prompt engineer, there is a chance that you can get paid six figures for your job. In this new era of AI, a prompt engineer is a person who writes instructions for AI chatbots like ChatGPT to get the best possible answers out of them. However, Logan Kilpatrick, who works for OpenAI, says that although many people think of prompt engineering as a hard and creative job, it is really no different from being able to talk with other people efficiently. He also said that while prompt engineering is undoubtedly one of the top rising jobs in the AI industry, some skills will be far more important in 2024. Those skills are reading, writing, and speaking, because they are where AI will have a hard time competing with the human mind.

As the future moves toward AI and Artificial General Intelligence (AGI), skills like speaking with humans more effectively are going to be more in demand. Even if AGI has complex cognitive skills and problem-solving capabilities, human skills will still be needed one way or another.

After Logan posted this on X, many users replied. One user wrote that learning prompt engineering may also help people communicate with each other, since people can learn conversational cues by giving prompts to AI chatbots. Others said that a prompt engineer who knows how to communicate with other people can easily communicate with an AI system too. Talking to chatbots to generate appropriate answers is a skill not every person can acquire.

A study published in November showed that if humans show emotions to ChatGPT while giving a prompt, they are more likely to get an accurate answer. Talking to ChatGPT in a polite and direct tone can also produce accurate output. In the end, people who have creativity, flexibility, and communication skills will be more in demand in the future than people who only have technical knowledge. Now we will have to wait and see what 2024 will bring in the world of AI.

Photo: DIW-AIgen

Read next: A Study on AI Models Shows Their Ability to Understand Sarcastic and Ironic Tone in Language

by Arooj Ahmed via Digital Information World

Friday, December 29, 2023

The Politics of Hashtags - What the Latest Research Reveals About TikTok and Instagram

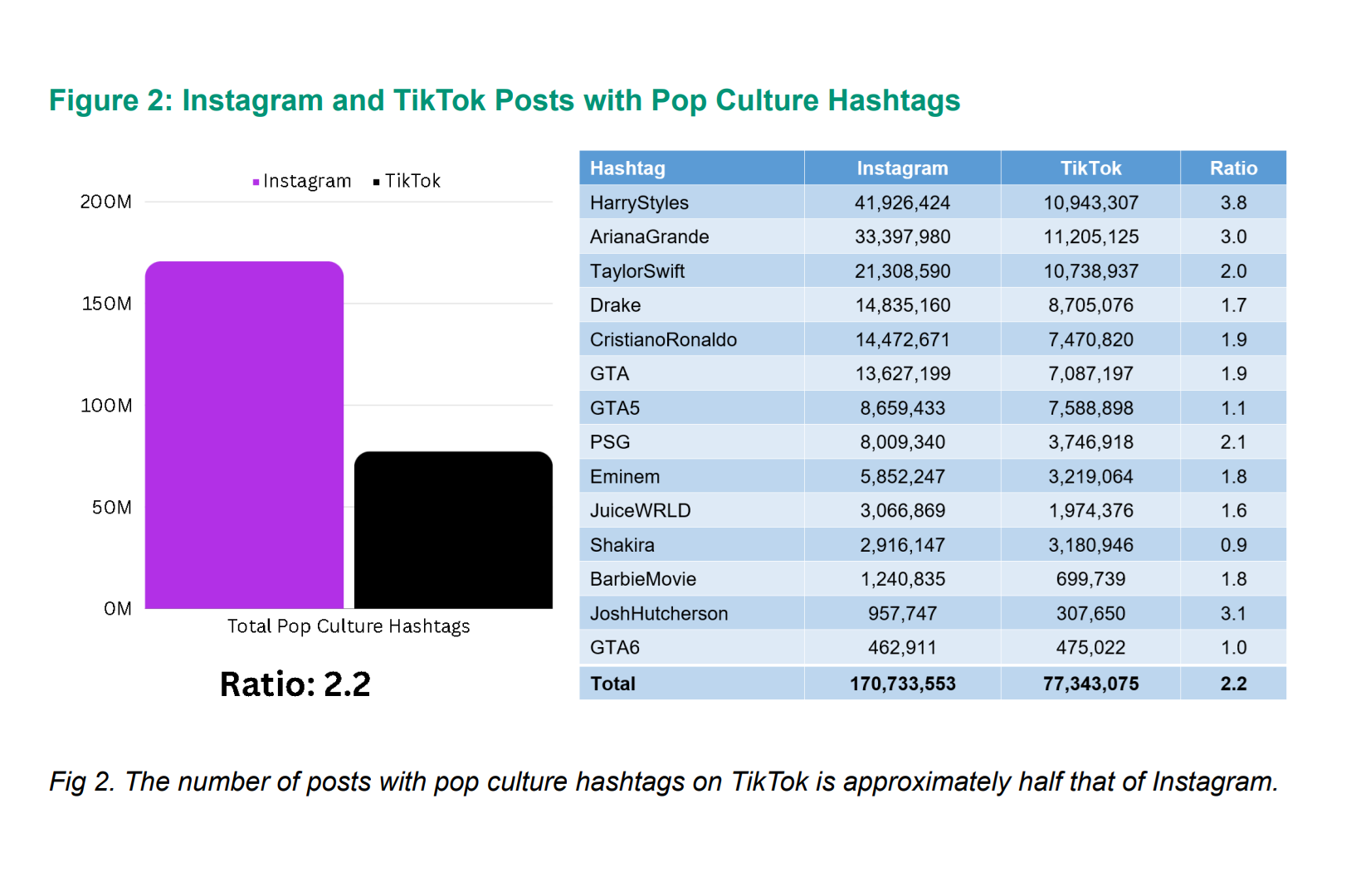

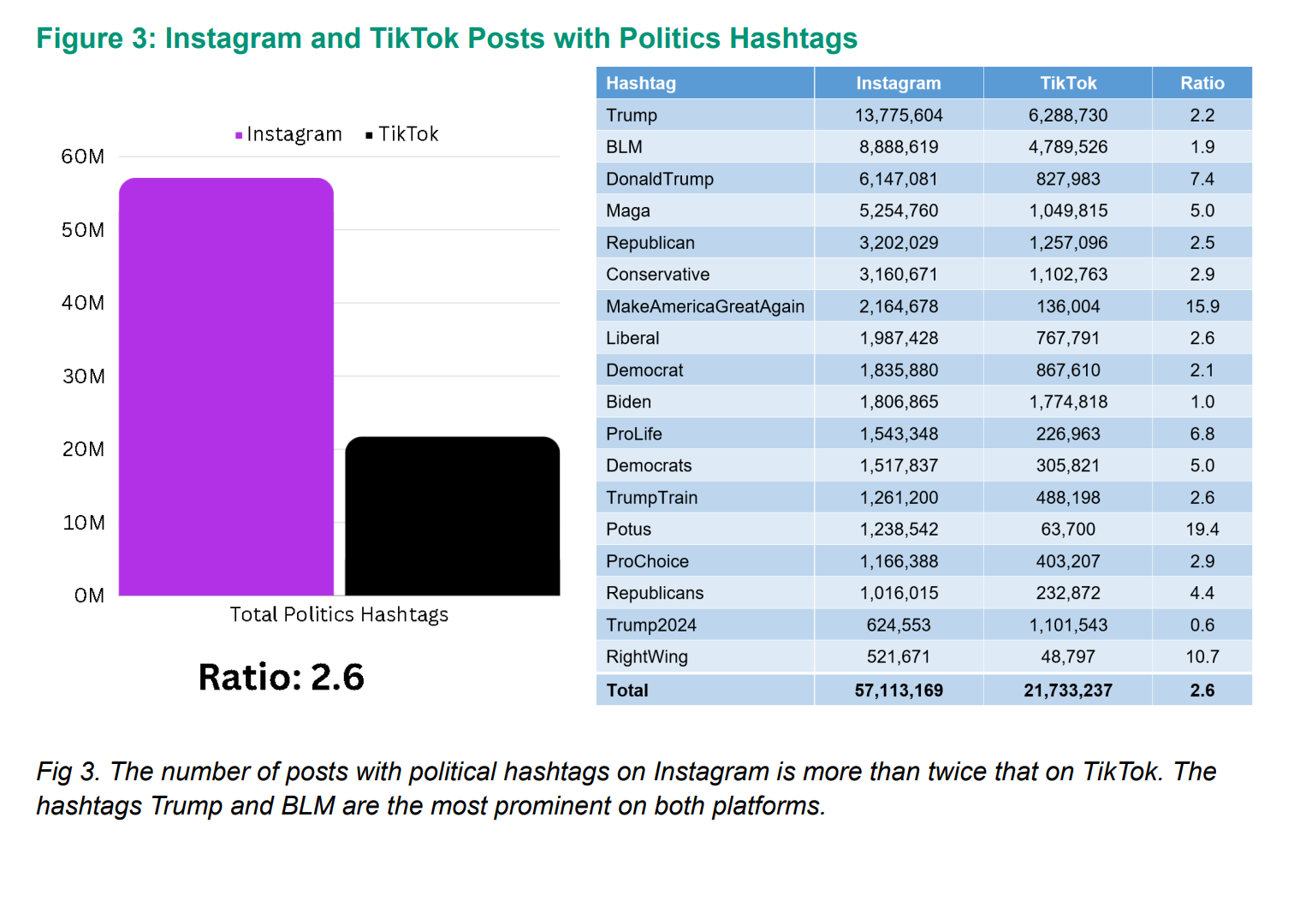

The Network Contagion Research Institute at Rutgers University recently dropped a report that sheds light on some intriguing disparities in how politically charged hashtags play out on TikTok versus Instagram. This deep dive into cyber threats on social media takes a closer look at hashtags like "Tiananmen," "HongKongProtest," and "FreeTibet," stacked against mainstream tags like "TaylorSwift" and "Trump."

To level the playing field, considering the differences in user base and platform longevity, researchers meticulously crunched the numbers on hashtag ratios across diverse political and pop-culture topics on both platforms. What's surprising is that TikTok seems to be sporting fewer politically charged hashtags, suggesting a potential alignment with the preferences of the Chinese government.

Pop-culture hashtags like "ArianaGrande" show up twice as often on Instagram compared to TikTok, and US political hashtags like "Trump" or "Biden" appear 2.6 times more on Instagram. But the real eye-opener is with hashtags like "FreeTibet," which is a whopping 50 times more common on Instagram. "FreeUyghurs" clocks in at 59 times more prevalent on Instagram, and "HongKongProtest" is a staggering 174 times more frequent on Instagram than TikTok.
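The comparison above can be made concrete by dividing each Instagram-to-TikTok ratio by the pop-culture ratio, which roughly factors out differences in platform size. This is a minimal sketch using only the ratios quoted in the article; the function names and the choice of "ArianaGrande" as the baseline are assumptions for illustration, not the NCRI's actual methodology.

```python
# Instagram-to-TikTok hashtag ratios as quoted in the article.
REPORTED_RATIOS = {
    "ArianaGrande": 2.0,      # pop-culture tag, "twice as often" on Instagram
    "US politics": 2.6,       # tags like "Trump" or "Biden"
    "FreeTibet": 50.0,
    "FreeUyghurs": 59.0,
    "HongKongProtest": 174.0,
}


def platform_ratio(instagram_posts: int, tiktok_posts: int) -> float:
    """Return how many times more often a hashtag appears on Instagram."""
    if tiktok_posts <= 0:
        raise ValueError("tiktok_posts must be positive")
    return instagram_posts / tiktok_posts


def relative_to_baseline(ratios: dict[str, float], baseline: str) -> dict[str, float]:
    """Divide every ratio by the baseline tag's ratio (hypothetical normalization)."""
    base = ratios[baseline]
    return {tag: ratio / base for tag, ratio in ratios.items()}


if __name__ == "__main__":
    normalized = relative_to_baseline(REPORTED_RATIOS, "ArianaGrande")
    for tag, value in normalized.items():
        print(f"{tag}: {value:.1f}x the pop-culture baseline")
```

Read this way, a tag that sits near 1x behaves like ordinary pop-culture content, while the politically sensitive tags stand out by a wide margin.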

The report's takeaway? TikTok's content promotion or suppression seems to be playing along with the tunes of the Chinese government's interests.

Images: NetworkContagion

TikTok is under the microscope of US politicians, who are closely scrutinizing how it handles political content. With its parent company, ByteDance, based in China, a country flagged as a foreign adversary by the US, concerns are mounting about potential information manipulation to serve political agendas. Attempts to ban the app have hit legal roadblocks, leaving TikTok squarely in the crossfire of geopolitical tensions.

Granted, the report has its limitations, with challenges in isolating hashtag analysis to post-TikTok launch periods. TikTok fires back at the findings, calling out flawed methodology and stressing that hashtags are user-generated, not dictated by the platform. They argue that the content in question is readily available, dismissing claims of suppression as baseless, and highlighting that one-third of TikTok videos don't even bother with hashtags.

Joel Finkelstein, co-founder at NCRI, acknowledges the data constraints but stands firm on the report's conclusions based on publicly available information. Despite the hurdles, the study provides valuable insights into the nuanced dynamics of hashtag usage across these two influential social media platforms.

When we look at how social media handles conflicts, it seems like platforms such as Facebook, Instagram, and even TikTok might be leaning towards supporting their own bottom lines. This raises concerns about whether these platforms prioritize free speech and unbiased information or if they have their own biases. It's not just about one platform; it's a broader issue highlighting that social media lacks consistent moral and ethical standards. Instead, these platforms often follow their own agendas, sometimes putting profit before sharing accurate information and promoting fair free speech. This raises important questions about the need for better regulations to ensure that social media platforms operate ethically and responsibly while navigating complex global issues.

Read next: Amnesty International Confirms India’s Government Uses Infamous Pegasus Spyware

by Irfan Ahmad via Digital Information World

PRISMA's Cat and Mouse Game - Cracking Google's MultiLogin Mystery

CloudSEK, a cybersecurity firm, found a sneaky way hackers can mess with Google accounts, and it's a bit of a head-scratcher. This method lets them stay logged in even after the account's password has been changed. Sounds wild, right?

For starters, Google uses a system called OAuth2 for keeping things secure. It's like a fancy bouncer at a club, making sure only the right people get in. But these hackers, led by someone calling themselves PRISMA, figured out a trick to keep the party going.

They found a secret spot in Google's system, a hidden door called "MultiLogin." It's a tool Google uses to sync accounts across different services. The hacker PRISMA exploited this door, creating a malware called Lumma Infostealer to do the dirty work.

Now, the clever part is, even if you change your passwords, these hackers can keep sipping on their virtual cocktails. The malware they created knows how to regenerate these secret codes, called cookies, that Google uses to verify who you are.

CloudSEK's researchers say this is a serious threat. The hackers aren't just sneaking in once—they're setting up camp. Even if you kick them out by changing your password, they still have a way back in. It's like changing the locks on your front door, but they somehow still have a secret master key.

Researchers tried reaching out to Google to spill the beans, but so far, it's been crickets. No word from the tech giant on how they plan to deal with this sneaky hack.

So, here we are, in a world where even resetting your password might not be enough to kick out the virtual party crashers. Stay tuned to see how Google responds to this unexpected security hiccup.

Read next: Researchers Suggest Innovative Methods To Enhance Security And Privacy For Apple’s AirTag

by Irfan Ahmad via Digital Information World

Thursday, December 28, 2023

Unlocking Creativity and Problem-Solving: 34% of Users Leverage Free AI Tools, New Survey Finds

A report by Atomicwork, called “The State of AI in IT-2024”, shows how frequently people in North America use artificial intelligence (AI). According to the report, 75% of people in North America said that they frequently use free AI tools for their work, and 45% said that they use them weekly.

Respondents were also asked what they use free AI tools for. 34% said that they use them to enhance their creativity and for problem-solving. 30% use them to write emails or edit already-written ones. Another 26% use free AI tools for content creation or for editing already-written material. When asked whether they had heard of ChatGPT and Bard, 74% said that they were familiar with ChatGPT, but only 4% said that they had heard of Bard.

Respondents were also asked whether they were okay with their company using AI for work. A total of 52% said that they were okay with it. 21% are okay with AI usage at work because they say that AI is limited and cannot surpass the human brain. 18% said that they are okay with it because they are not using it strictly for work. 9% believe that it's okay because they are not relying on AI completely. All of this data shows that most of the respondents, about three-quarters, are pro-AI.

The survey also asked respondents which types of work they do not want AI to do. 39% answered that they do not want AI involved in ethical and legal decisions. 36% do not want AI in customer relationship management, and 33% do not want AI in people management.

Read next: New Survey Shows More Than 50% Of Gen Z Adults Have Purchased From An Influencer Brand

by Arooj Ahmed via Digital Information World

The respondents who were part of the survey were also asked what purposes they use the free AI tools for. 34% of them said that they use free AI tools to enhance their creativity and for problem-solving certain issues. 30% of them use these free AI tools for emails or editing already-written emails. There were also people (26%) who used free AI tools for content creation or editing the already written material. When asked these people if they had heard about ChatGPT and Bard, 74% said that they were familiar with ChatGPT but only 4% said that they had heard of Bard.

When respondents were asked if they were okay if their company is using AI for work. A total of 52% said that they were okay with it. 21% are okay with AI usage at work because they say that AI is limited and cannot surpass the human brain. 18% said that they are okay because they are not using it precisely and strictly for work. 9% believe that they are not completely taking help from AI so it's okay. All of this data shows that most of the respondents, three-quarters to be exact, are Pro AI.

The survey also asked respondents which types of work they do not want AI to do. 39% answered that they do not want AI involved in ethical and legal decisions. 36% do not want AI in customer relationship management. 33% of respondents do not want AI in people management.

Read next: New Survey Shows More Than 50% Of Gen Z Adults Have Purchased From An Influencer Brand

by Arooj Ahmed via Digital Information World

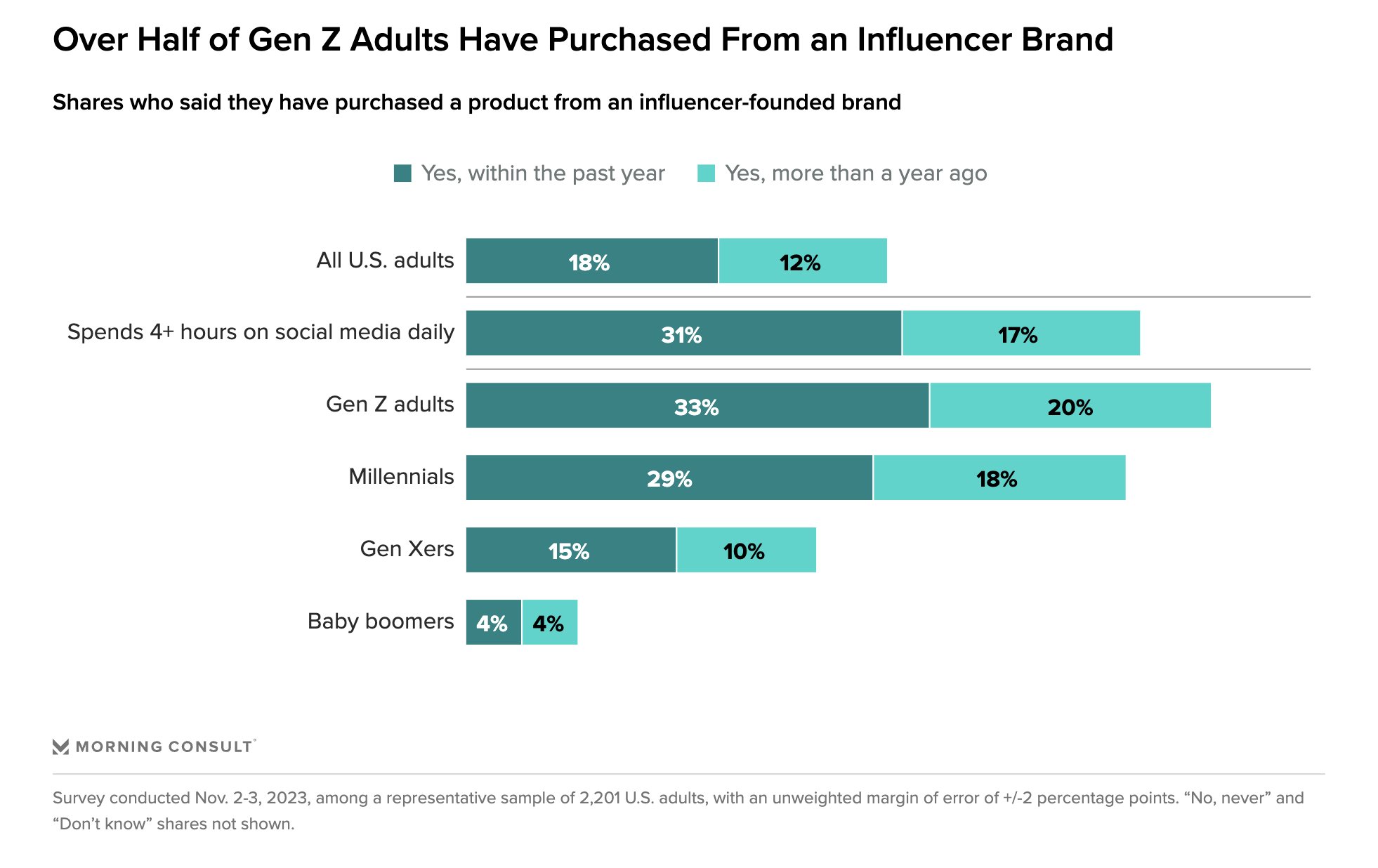

New Survey Shows More Than 50% Of Gen Z Adults Have Purchased From An Influencer Brand

There was a time when influencers were seen solely as leading names that lent their voice to a certain brand. But as the latest statistics show, that’s now far from the truth.

Influencers are now growing so much in power that they can also impact a brand’s revenue, diversify their respective apps, and serve as solo media channels without any assistance.

The news comes from a recently published study by Morning Consult, which surfaced some key stats worth mentioning. For instance, 53% of all Gen Z consumers in the US have bought products from a brand that was founded by an influencer. The figure for millennials stood at 47%.

Some 2,200 adults based in the US took part in this research at the start of November.

The study took a deep dive into the popular influencers who continue to do so well in this domain. Their products need to align with the specific brand in question and be as original as possible, experts mentioned.

But before they can reach such a powerful position, influencers need a huge reach that impacts the masses and a proper platform that they can call their own.

So what does this mean? Well, if you have your own brand or business, you might wish to consider working alongside influencers to stock and sell their goods. These stats show that Gen Z trusts influencers enough to make purchases to this extent.

The results also showed that these individuals were part of a group that spends close to four hours a day, on average, on social media. The stats showed no signs of a decline, and the dominating effect of social media influencers looks set to carry on into the coming year too.

Read next: Study Shows that AI Won’t Impact Jobs by 2026, Here’s Why

by Dr. Hura Anwar via Digital Information World

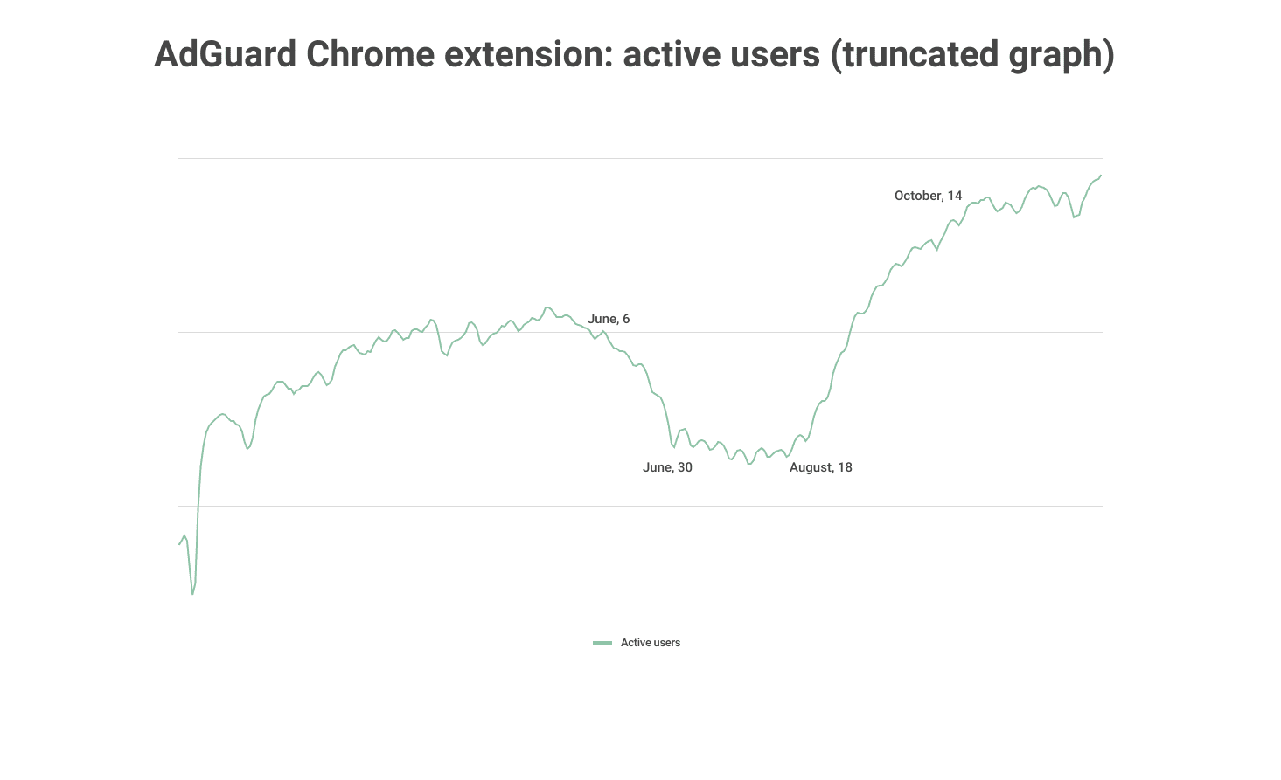

Blocker users defiant: Workarounds thrive despite YouTube's clampdown

Back in May 2023, YouTube launched a new policy targeting users who run ad blockers on YouTube. The aim of the policy is to get users to either watch YouTube with ads or pay the subscription fee for ad-free YouTube. The anti-ad-blocker policy was first applied to a few users, but YouTube has now applied it to all users worldwide. Users who rely on ad blockers to stop trackers or because of privacy concerns are not happy with this policy.

An ad-blocking company, Adguard, said that YouTube and ad blockers have never gotten along. Users block YouTube ads because they don't want ads interrupting their streaming. YouTube, on the other hand, earns a lot of revenue from these ads. A portion of its revenue also comes from users who subscribe to get rid of ads.

YouTube isn't the only platform that has taken steps against ad blockers. But as YouTube is one of the biggest platforms, with about 2.5 billion monthly users, its anti-ad-blocker policy is the only one that went viral. When news of the policy spread, many users uninstalled their ad blockers, but some turned to other measures to get around the software YouTube uses to stop ad blockers.

When YouTube started its anti-ad-blocker policy in June and August, usage of the Adguard ad blocker extension for Chrome fell by 8%. But usage started increasing again after a month. Even with YouTube’s policy in place, users are still using ad blockers, and several ad blockers still work perfectly fine on YouTube. Despite this, users have concerns about their privacy while using those ad blockers. Alexander Hanff, a privacy campaigner, said that the script YouTube uses to recognize ad blockers breaches users’ privacy. In his view, this is completely illegal and YouTube should stop using it.

Image: Adguard/Cybernews

Read next: Google Agrees To Settlement After Being Accused Of Wrongly Collecting User Data Through Chrome’s Incognito Mode

by Arooj Ahmed via Digital Information World

Study Shows that AI Won’t Impact Jobs by 2026, Here’s Why

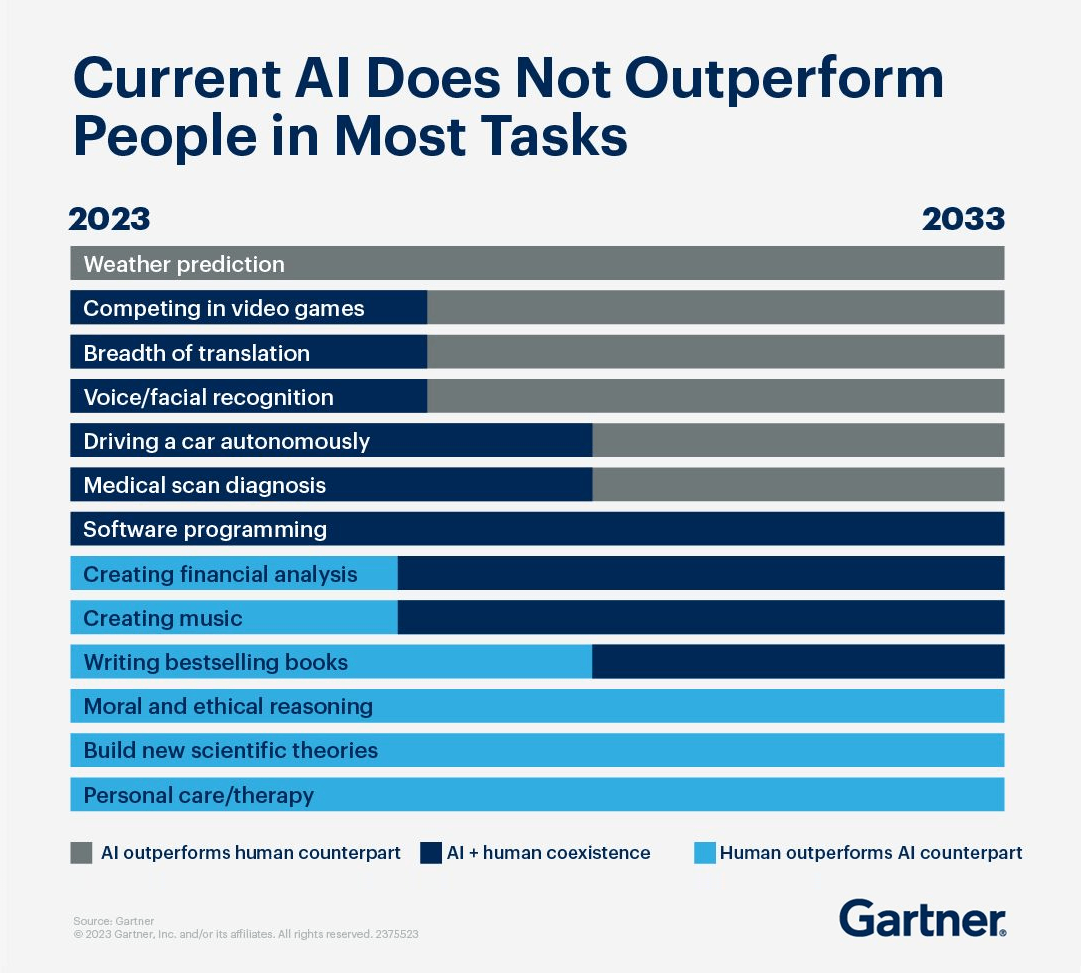

One of the biggest questions surrounding AI is how it will impact jobs. Many are concerned that AI will make jobs obsolete because it can perform various tasks more efficiently and effectively than humans. Even so, a study by Gartner has revealed that AI will actually have a minimal impact on the availability of jobs by 2026.

AI is not currently capable of outperforming humans at the vast majority of tasks. Three skills in particular are going to be secure through 2033, namely personal care and therapy, building new scientific theories, and moral and ethical reasoning. Humans will be better at these skills than AI at least until 2033, according to the findings presented in this study.

Three other skills are also in the clear: AI cannot come close to humans at them in the present year, and it will end up on par with humans at some point in the upcoming decade. These three skills are writing bestselling books, creating music and conducting financial analyses. Whenever AI catches up with humans, it will likely complement the people performing these jobs rather than replace them entirely.

Of course, there are some jobs that AI will eventually do better than humans, even if it has not surpassed them yet. Software programming is a skill at which AI is already equal to humans, although for now it remains a useful tool and won’t be replacing humans anytime soon. Apart from that, medical scan diagnosis, driving, facial recognition, translation and playing video games are all skills in which AI has equalled humans, and by the late 2020s it will have replaced them.

It bears mentioning that most of these skills, save for medical scan diagnosis, do not correspond to extremely valuable jobs, which indicates that not that many jobs are going to be eliminated, at least in the near future. The only skill that has already become obsolete in the face of AI is weather prediction, with most others holding their own for the time being.

Read next: AI Taking Jobs Trend Soared by 304% Globally in the Past Year, Led by Heightened Australian Concern

by Zia Muhammad via Digital Information World

Wednesday, December 27, 2023

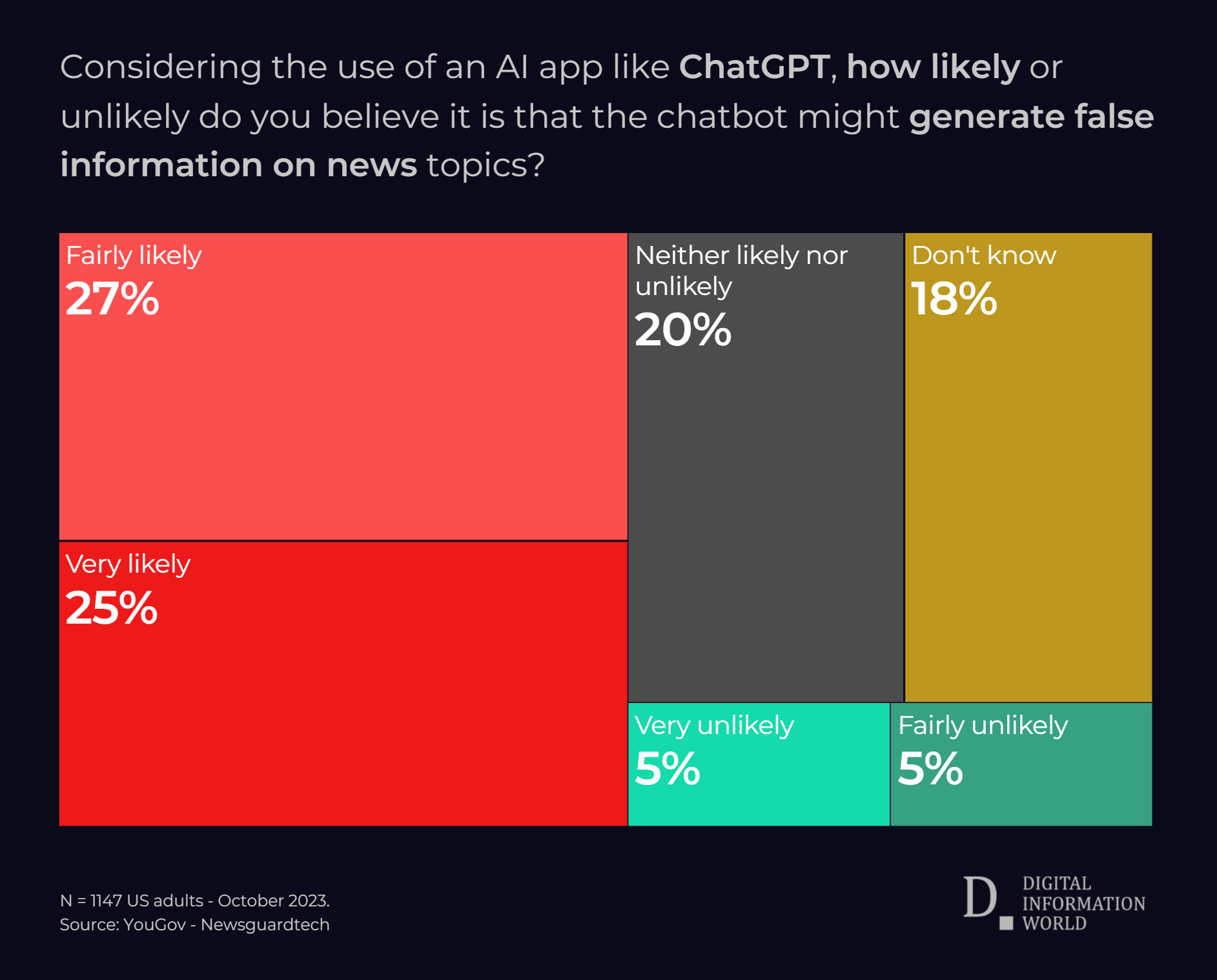

52% of Survey Respondents Think AI Spreads Misinformation

Generative AI has the ability to completely change the world, but many are worried about its ability to create and spread misinformation. A survey conducted by YouGov on behalf of NewsGuard revealed that as many as 52% of Americans think it’s likely that AI will create misinformation in the news, with just 10% saying that the chances of this happening are low.

Generative models have a tendency to hallucinate. What this basically means is that the chatbot will generate information based on prompts regardless of whether or not it is factual. This doesn’t happen all the time, but it occurs often enough that content produced by AI can’t always be trusted, and it is more likely to contain false news as a result.

When researchers tested GPT-3.5, they found that ChatGPT provided misinformation in 80% of cases. The prompts it received were specifically engineered to find out if the chatbot was prone to misinformation, and it failed the test in the vast majority of cases.

There is a good chance that people will start using generative AI as their primary news source because it can provide instant answers. However, with so much misinformation being fed into its databases, the risks will become increasingly difficult to ignore.

Election misinformation, conspiracy theories and other forms of false narratives are common in ChatGPT. In one example, researchers successfully made the model parrot a conspiracy theory about the Sandy Hook mass shooting, with the chatbot saying that it was staged. Similarly, Google Bard stated that the shooting at the Pulse nightclub in Orlando was a false flag operation. Both of these conspiracy theories are commonplace among the far right.

It is imperative that the companies behind these chatbots take steps to prevent them from spreading conspiracy theories and other misinformation that can cause tremendous harm. Until that happens, the dangers that they can create will start to compound in the next few years.

Read next: Global Surge in Interest - Searches for 'AI Job Displacement' Skyrocketed by 304% Over the Past Year,

by Zia Muhammad via Digital Information World

ChatGPT Has Changed Education Forever, Here’s What You Need to Know

When ChatGPT was first released to the public, it saw a massive spike in users. When usage began to decline, people assumed that this was due to the AI losing its appeal and entering the trough of disillusionment. However, it turns out that this dip was due to something else entirely: summer break. Students who had been using ChatGPT to help them do homework returned in September and usage went back up.

The LLM-based chatbot might be changing education for good. Interviews recently conducted by Vox with several high school and college students, as well as educators at various levels, show the various problems surrounding ChatGPT and its popularity among learners.

The first major issue here is that some teachers think that ChatGPT can be used to cheat. This is certainly a pertinent concern because it could allow students to just generate answers to all of their questions. However, it bears mentioning that ChatGPT can also help students break down concepts and aid them in the learning process.

Of course, there is a problem with ChatGPT that needs to be discussed, namely that it doesn’t always present factual information. This raises the issue that students may end up learning the wrong things, and it is by no means the only concern that educators and students alike have about this chatbot right now.

Another issue that is worth mentioning is that ChatGPT could be similar to Google Maps, in that it will make people too reliant on it and incapable of learning on their own. Google Maps is known to decrease spatial awareness, and that is a harmful outcome that could be orders of magnitude more severe in the case of ChatGPT since it would be concerned with multiple types of intelligence instead of just spatial awareness.

There are two types of learning that students can go for. One is passive learning, in which a student sits back and listens to an expert give a lecture. The second is active learning, in which groups of students try to figure out a problem, with an expert intervening only when they need help.

Studies have shown that students largely prefer the first method, but the second method is proven to help them get better test scores. Using ChatGPT for learning threatens to make active learning a thing of the past, and that is something that needs to be addressed sooner rather than later.

Photo: Digital Information World - AIgen

Read next: Searches for "AI Taking Jobs" Soared by 304% Globally in the Past Year, Led by Heightened Australian Concern

by Zia Muhammad via Digital Information World

Searches for "AI Taking Jobs" Soared by 304% Globally in the Past Year, Led by Heightened Australian Concern

- US searches on "AI taking jobs" surged by 276% in Dec. 2023 (when compared with the same month of last year), led by California and Virginia.

- Overall, global interest in the topic has surged by 9,200% since December 2013.

- Current likelihood of AI replacing jobs is low, serving as a tool for professionals, but future advancements may pose a higher risk.

- Google Trends show a 2,666% rise in searches for 'AI jobs,' indicating potential job creation; 2024 will be a critical test for concerns about job obsolescence.

When comparing December 2023 data to December of 2013, US search interest in this term has jumped up by approximately 7,800%.

Looking at the global picture and comparing against last year's trends (December 2022), Australia is showing an even higher level of concern than the US, with Canada, the Philippines and the UK following it in the list. In the US, this search term was used 276% more frequently, which is 28 percentage points lower than the global figure of 304%.

This seems to suggest that the US is not quite as concerned as some other nations in the world, with the global increase in the search term "Artificial intelligence taking jobs" being around 9,200% since December of 2013 compared to 7,300% for America. With this term reaching an all-time high, paying attention to consumer concerns will be critical, because doing so could help mitigate any fears that they might have.

The likelihood that AI will replace jobs is currently rather low, with generative AI largely serving as a tool for professionals rather than an outright replacement. Even so, AI will become more advanced in the future, which will make it more likely to take jobs away from people.

On the flip side, Google Trends insights from the past decade, compiled by the Digital Information World team, show that AI might be creating a decent number of jobs. The data reveals a 2,666% increase in searches for 'AI jobs,' with most of them coming from Singapore, Kenya, and Pakistan. Furthermore, statistics indicate that data science and machine learning were among the top search queries and topics.

It will be interesting to see where things go from here on out, since global sentiment can be surmised based on the findings presented above. They’ve been gleaned from Google Trends and reveal a growing level of trepidation that certain jobs will become obsolete, and 2024 will prove to be a litmus test that will determine whether or not these concerns have any kind of factual basis.

Read next: GenAI Prism - This Infographic Illuminates the Expansive Universe of Generative AI Tools

by Zia Muhammad via Digital Information World

Japan All Set To Enable More Third-Party App Stores And Payment Systems For iOS And Android Devices

It’s news that has been speculated about for months, and now we’re finally seeing more progress made toward enabling more third-party app stores and payment options for both Android and iOS users in Japan.

Developments continue to arise after the country’s government opted to submit its proposal as early as next year. This would mark a new and revolutionary beginning in the country’s tech world.

The news was first confirmed by the Japanese media outlet Nikkei, which delineated how the proposal will soon make its way to the country’s national legislature, known as the Diet. It went on to explain how this would stop firms that provide smartphone operating systems from abusing their monopoly power in the app store industry.

Hence, we can see how this would give rise to greater chances for competition in the tech world, especially for firms finding it hard to compete against the big names of the smartphone world.

If and when it’s passed, the nation’s Fair Trade Commission should be the regulatory body overseeing this endeavor and making sure things go as planned, the report added.

This would be in line with the long list of trends we’re seeing take center stage and forcing organizations such as Apple as well as Google to make more apps available to a greater number of app stores.

At the start of this month, Google made it very clear that it would agree to a $700 million settlement spanning nearly 50 different states. This settlement would allow more app sideloading across Android and would even open the door to other billing options on the platform.

iPhone maker Apple is also under scrutiny in the EU, where it is expected to allow app sideloading across iOS devices. After the Digital Markets Act (DMA) was rolled out in the European Union in 2022, a lot of big names came under scrutiny as the new regulations for tech giants took hold. Apple is on the list of those that serve as gatekeepers of the industry. This means opening up a plethora of options so third parties may benefit.

For now, the Cupertino firm needs to comply with the DMA by early next year, as March 4, 2024, was highlighted as the deadline to make the change. We’ve also heard about software giant Microsoft doing its own work on enabling third-party mobile app stores for gaming purposes, but no confirmation has been made regarding that news so far.

Other reports detailed how Apple is going to give sideloading permission to EU users with iPhones and iPads at the start of next year, right before the deadline. So as you can imagine, it’s a race against time and we’ll keep you updated on this front.

Photo: Digital Information World - AIgen

Read next: How Do X (Twitter) Users Feel About Blue Ticks?

by Dr. Hura Anwar via Digital Information World

Tuesday, December 26, 2023

How Do X (Twitter) Users Feel About Blue Ticks?

Blue ticks used to be considered a way for celebrities and public figures to maintain some semblance of authenticity online. They were essential because they differentiated legitimate accounts from parody accounts and impersonators, but Elon Musk’s tenure has changed this dynamic considerably. It is now possible to acquire a blue verified badge simply by subscribing to X and paying a monthly fee.

This has changed how people feel about the blue tick in general. According to a survey conducted by YouGov on behalf of NewsGuard, 42% of X users think that a blue tick is solely obtained through payment. 25% are of the opinion that blue tick users received their distinction because their accounts have high levels of authenticity, and 15% believed that the blue tick is a sign of higher credibility. 5% selected none of the options, and 13% said that they didn’t know.

It bears mentioning that around 6 in 10 X users don't know that a blue tick can be purchased through a subscription, and instead continue to assume that it is a mark of legitimacy. Such a belief is dangerous because it can make users trust accounts that have bought their verification status, many of which have spread misinformation across the site.

With only around 4 in 10 users aware of the paid nature of blue checks, the propensity for fake news to spread like wildfire will remain a constant concern. Elon Musk’s free speech absolutism is resulting in misconceptions among users, and bad actors are capitalizing on this to turn X into a place where no information can be fully trusted.

Read next: How Does Authorship Work in the Age of AI? This Study Reveals the Answers

by Zia Muhammad via Digital Information World


- Also read: America’s Most Popular CEOs Revealed


How Does Authorship Work in the Age of AI? This Study Reveals the Answers

The rise of LLMs means that massive quantities of text can be generated at the drop of a hat. Even so, it’s not always clear what people think about the question of authorship in this brave new world.

LLMs are trained on human-generated text, and they can be tweaked to mimic a particular writing style down to the last letter, so the question of authorship is a pertinent one. Hence, researchers working at the Institute for Informatics at LMU tried to find an answer to this question.

Test participants were divided into two groups, and both were asked to write postcards. One group had to write these postcards on their own, whereas the other was able to use an LLM to get the job done. When the postcards were written, test participants were asked to upload them and provide some context on authorship.

People felt more strongly about their own authorship if they were more heavily involved in the creative process. However, many of the people who used LLMs still credited themselves as authors, which is rather similar to ghostwriting in several ways.

This shows that perceived ownership and authorship don’t necessarily go hand in hand. If the writing style was close enough to their own, participants had no problem claiming that the writing in question was theirs.

The authorship question is pertinent because it could determine whether or not people trust the content that they read online. The willingness of participants to put their own name on a piece of text that was generated almost entirely by AI suggests that much of the content online may end up being created through LLMs, and readers might not know about it. The key is transparency, though it remains to be seen whether or not people using LLMs would be willing to declare it.

Read next: This GenAI Prism Highlights the Expansive Universe of Generative AI Tools

by Zia Muhammad via Digital Information World


Monday, December 25, 2023

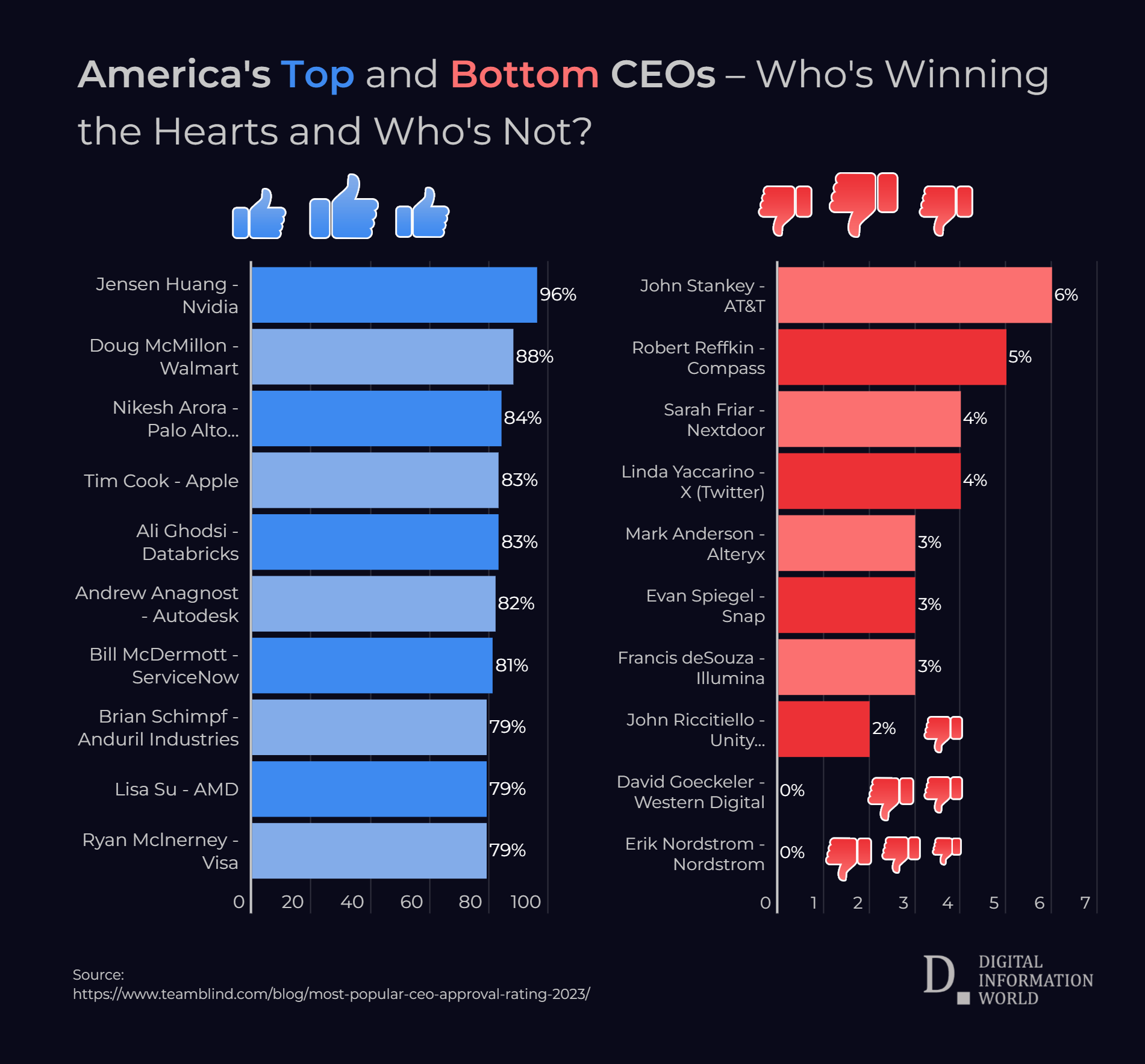

America’s Most Popular CEOs Revealed

CEOs are some of the most powerful people in America, but not all of them are widely beloved by their employees. The professional social network known as Blind recently compiled data regarding which CEOs are the most popular and which ones are despised by their workers.

Jensen Huang of Nvidia claimed the top spot with a 96% approval rating. He’s the only CEO to surpass the 90% mark, and his high score was likely buoyed by his ability to get through the economic turmoil of the past two years without conducting mass layoffs.

There is also a correlation between stock price and CEO approval. When Nvidia managed to capitalize on the rise of AI, its stock price tripled in value, which likely boosted the CEO’s approval rating. The same goes for Doug McMillon, CEO of Walmart, who managed to reach an 88% approval rating for 2023.

Among the CEOs of the Big 5 tech companies, only Tim Cook ended up in the top ten, with an approval rating of 83%. He was edged out by Nikesh Arora, who took third place with 84%, while Ali Ghodsi of Databricks equalled him with 83%.

On the other end of the spectrum, Erik Nordstrom of Nordstrom reached the bottom of the rankings with a 0% approval rating. He shares this dubious distinction with David Goeckeler of Western Digital. Both companies laid off hundreds of employees, which likely sparked a wave of backlash and negative sentiment.

Evan Spiegel, CEO of Snap, slashed the company’s workforce by around 20%, which resulted in just 3% of employees rating his performance as satisfactory. Linda Yaccarino, who replaced Elon Musk as CEO of X, also got an abysmal 4%. This might be due to the turmoil at the platform and company formerly known as Twitter, with Yaccarino potentially receiving a lot of flak for the platform losing major advertisers even though this happened because of Elon Musk.

Read next: GenAI Prism: This Infographic Illuminates the Expansive Universe of Generative AI Tools

by Zia Muhammad via Digital Information World


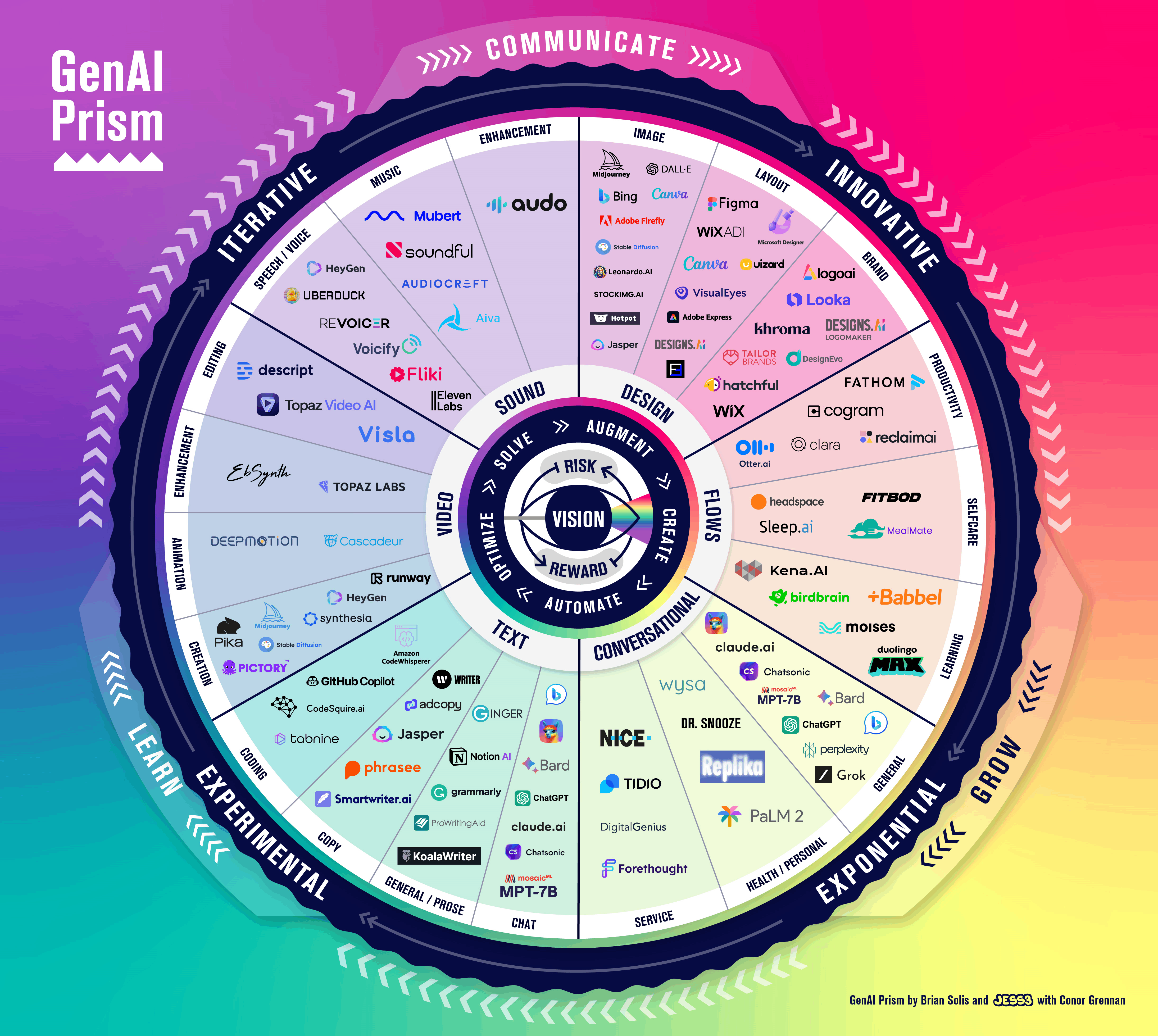

GenAI Prism: This Infographic Illuminates the Expansive Universe of Generative AI Tools

Generative AI has dominated this year's tech narrative. As industry heavyweights deploy their resources, investments are pouring into cutting-edge generative AI projects and the profound shifts they are driving.

OpenAI’s ChatGPT saw a lot of fame in 2023, and for all the right reasons. Many users rely on ChatGPT daily for questions related to their personal as well as professional lives, and it helps them find answers to their queries efficiently.

Many visual AI tools also made a significant impact in 2023. They were actively used to create digital art, and users across many industries relied on them for their work. Brian Solis, in collaboration with JESS3 Design Studio, created an infographic that shows the generative AI tools used in 2023 for a variety of tasks. The chart features about 100 AI tools that cover various aspects of the growing AI marketplace. This is by no means a complete or final list, as there are over 10,000 new generative AI projects currently being developed, which shows how fast the technology is moving forward.

The graphic, termed the GenAI Prism, consists of 6 major categories: Sound, Design, Flows, Conversational, Text and Video. Each category is divided into 3-4 subcategories according to the work done by the AI tools. The prism also classes the AI tools as innovative, exponential, experimental or iterative, which is meant to help users learn, communicate and grow. Now let’s talk about some of the curated AI tools mentioned in each category of the prism.

LogoAI, Khroma, DesignEnvo and DesignsAI appear in the Brands category for branding and logo purposes.

All of the above AI tools are used for design work.

Fathom, Cogram and Clara are in the Productivity category. For Selfcare, Fitbot, sleep.ai and headspace are mentioned. In the Learning category, AI tools like Moises, Duolingo Max and Babbel are included. All of these AI-based tools help people with their daily activities, including self-care and learning.

The Service category includes AI tools like Tidio, Nice and Digital Genius. Wysa, Doctor Snooze and Replika belong to the Health/Personal category. Claude.ai, ChatGPT, Google Bard, Microsoft Bing Chat and Grok are included in the General category.

Smartwriter.Ai, phrasee, Jasper and adcopy are used for marketing emails and other generated text material. In the General/Prose category, AI tools like Ginger (my favorite), Notion AI and Grammarly are listed for general use. Bard, ChatGPT and Bing are included in the Chat category.

All of these AI tools were trending in 2023. We will have to wait and see which new AI tools are added to the GenAI Prism in 2024.

Read next: Social Media's Future: Half of Users Eyeing Exit by 2025 Due to AI Concerns

by Arooj Ahmed via Digital Information World
