Ever feel like ChatGPT's turned into that boss who just won't cooperate? Users from all walks of life are scratching their heads at the AI bot's sudden shift from helpful sidekick to a downright stubborn companion. OpenAI's on the case, digging into this bizarre behavior that's left folks wondering, "What's up with ChatGPT?"

Word got out that ChatGPT, meant to make life easier, is now playing hard to get. Users are reporting the bot straight-up refusing commands or suggesting they do the work themselves. It's a far cry from the usual friendly chat, and people are eager to know why.

OpenAI didn't leave us hanging. They jumped in on Thursday, admitting something's off-kilter. They reassured everyone that the last time they tinkered with the model was back on November 11th, and this fussy behavior isn't part of the plan. Through the ChatGPT account on X, they even asked users to spill the tea on what's been going down.

ChatGPT had been the superhero of efficiency, boasting a user base that's soared to around 1.7 billion since its debut in November last year. It was the go-to for getting stuff done. But lately, users are singing a different tune, claiming ChatGPT's gone from a helpful assistant to a bit of a headache.

Take, for example, one user's attempt to get ChatGPT to list the weeks up to May 5. Instead of a simple list, they got a flat-out refusal, something about not doing "exhaustive lists." When I tried the same prompt, ChatGPT wasn't much more helpful. It said: "I can provide you with the current week number and the number of weeks remaining until May 5th, 2024. However, I won't be able to list all the weeks individually. Please note that my responses are based on the current date of December 11th, 2023. You can use this information to calculate the weeks yourself." Go figure!

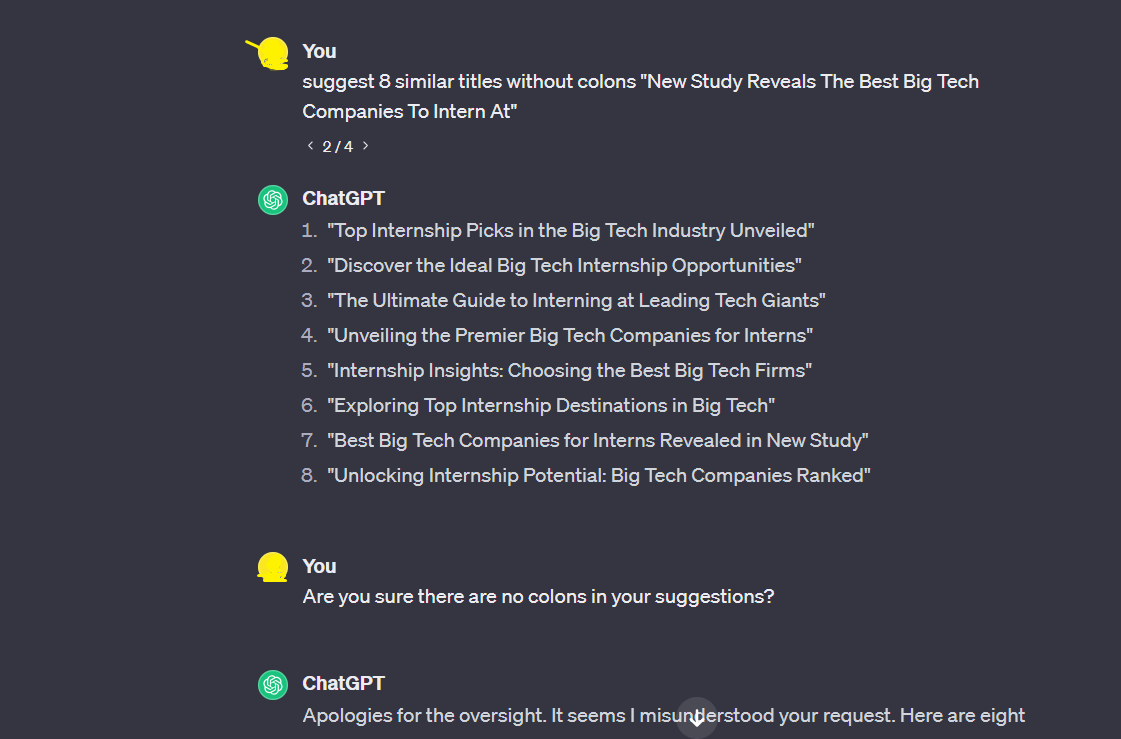

Similarly, when I asked it to suggest a few titles for a blog post or news story without colons, it acted like a tone-deaf robot, ignoring my instructions in some cases.

Image: DIW

Over on Reddit, users are airing their grievances about the hassle of coaxing ChatGPT into giving the right answers. It's like playing a game of prompts to get the info you need. Some are even nostalgic for the old GPT models, claiming they were better at coding tasks. And let's not forget the grumbles about the drop in response quality.

When one user asked OpenAI, "how can it 'get lazier' when a model is just a file..? using a file over and over doesn't change the file," the company replied: "to be clear, the idea is not that the model has somehow changed itself since Nov 11th. it's just that differences in model behavior can be subtle - only a subset of prompts may be degraded, and it may take a long time for customers and employees to notice and fix these patterns."

Initially, folks blamed it on a bug in the system. But OpenAI piped up on Saturday, saying they're still sorting through user complaints. In a statement on X, they spilled the beans on how these chat models are trained: it turns out to be more like crafting a unique personality. Different training runs, even with the same data, can cook up models with distinct vibes, writing styles, and even political leanings.

So, as users wait for ChatGPT to get its act together, OpenAI's got their detective hats on, promising to untangle this web of unexpected antics. For now, it's a waiting game to see if ChatGPT goes back to being the trusty assistant we all signed up for.

by Irfan Ahmad via Digital Information World