Since the very beginning of human existence, people's actions have shaped the Earth. Whether gathering food, cultivating crops, constructing settlements, or exploring vast oceans, humans have undeniably influenced the world.

With the expansion of the global population and the advancement of civilizations, the magnitude of that impact has grown sharply. The rise of mass agriculture, the extraction of natural resources, and the development of urban infrastructure are just a few notable signs of how mankind has evolved.

Adam Symington, a computational materials scientist at the University of Bath, recently mapped the influence of the human race on Earth between 1993 and 2009.

To assess and quantify human impact precisely, the researchers collected and analysed data from 1993 to 2009, focusing on eight key factors that indicate human influence:

Built environments: this variable examines the extent of human-made structures, including cities, towns, and infrastructure such as buildings, roads, and bridges.

Population density: this variable measures the concentration of human inhabitants in specific areas, highlighting regions with high population density.

Night-time lights: by analysing satellite imagery of Earth at night, researchers can map the intensity and spread of artificial lights, providing insights into urbanisation and human activity.

Croplands: this variable focuses on the extent of land used for agricultural purposes, such as cultivating crops and food production. It reveals the areas where significant agricultural activity occurs.

Pasture: by examining the extent of land dedicated to grazing animals, this variable highlights the impact of livestock farming and the need for space to sustain animal populations.

Roads: mapping the road network allows researchers to understand the connectivity and accessibility of different regions, emphasising areas with significant transportation infrastructure.

Railways: this variable highlights the presence and extent of railway systems, which are critical for transportation, trade, and economic development.

Navigable waterways: by analysing the presence and significance of rivers, canals, and other water bodies, this variable offers insights into regions where water transport plays a crucial role in human activities.

The results showed that rising human populations, infrastructure, and roads were the strongest drivers. Regions including South America and Asia also showed the influence of growth in farming and urban development. India and Pakistan were among the areas where the highest influence was recorded.

On the other hand, some regions, including northern Canada, eastern Russia, and Iceland, experienced the least influence. Similarly, places with few or no human inhabitants, such as the Amazon rainforest, deserts, and plateaus, saw only minute changes, and those changes were mostly driven by natural resource extraction or infrastructure development.

While some areas of Earth still remained untouched in 2009, subsequent shifts in demography, political conditions, and population could significantly amplify the impact on the planet, both now and in the future.

by Arooj Ahmed via Digital Information World

"Mr Branding" is an RSS-based blog covering everything related to website branding and website design. It collects its posts from many sites to make it easier to keep up with the latest technology.

To suggest any source, please contact me: Taha.baba@consultant.com

Wednesday, June 21, 2023

Understanding the Biases Embedded Within Artificial Intelligence

In a world where artificial intelligence (AI) is becoming increasingly prevalent, there is growing concern about the biases embedded within these technologies. A recent analysis of Stable Diffusion, a text-to-image model from Stability AI, has revealed that it amplifies stereotypes about race and gender, exacerbating existing inequalities. The implications of these biases extend beyond perpetuating harmful stereotypes and could potentially lead to unfair treatment in various sectors, including law enforcement.

Stable Diffusion is one of many AI models that generate images in response to written prompts. While the generated images may initially appear realistic, they distort reality by magnifying racial and gender disparities to an even greater extent than what exists in the real world. This distortion becomes increasingly significant as text-to-image AI models transition from creative outlets to the foundation of the future economy.

The prevalence of text-to-image AI is expanding rapidly, with applications emerging in various industries. Major players like Adobe Inc. and Nvidia Corp. are already utilizing this technology, and it is even making its way into advertisements. Notably, the Republican National Committee used AI-generated images in an anti-Biden political ad, which depicted a group of primarily white border agents apprehending individuals labeled as "illegals." While the video appeared real, it was no more authentic than an animation, yet it reached close to a million people on social media.

Experts in generative AI predict that up to 90% of internet content could be artificially generated within a few years. As these AI tools become more prevalent, they not only perpetuate stereotypes that hinder progress toward equality but also pose the risk of facilitating unfair treatment. One concerning example is the potential use of biased text-to-image AI in law enforcement to create sketches of suspects, which could lead to wrongful convictions.

Sasha Luccioni, a research scientist at the AI startup Hugging Face, highlights the problem of projecting a single worldview instead of representing diverse cultures and visual identities. It is crucial to examine the magnitude of biases in generative AI models like Stable Diffusion to address these concerns.

To understand the extent of biases, Bloomberg conducted an analysis using Stable Diffusion. The study involved generating thousands of images related to job titles and crime, and the results were alarming. For high-paying jobs, the AI model predominantly generated images of individuals with lighter skin tones, reinforcing racial disparities. Furthermore, the generated images were heavily skewed towards males, with women significantly underrepresented in high-paying occupations.

The biases were particularly pronounced when considering gender and skin tone simultaneously. Men with lighter skin tones were overrepresented in every high-paying occupation, including positions like "politician," "lawyer," "judge," and "CEO." Conversely, women with darker skin tones were predominantly depicted in low-paying jobs such as "social worker," "fast-food worker," and "dishwasher." This portrayal created a skewed image of the world, associating certain occupations with specific groups of people.

Stability AI, the distributor of Stable Diffusion, acknowledges that AI models inherently reflect the biases present in the datasets used to train them. However, the company intends to mitigate biases by developing open-source models trained on datasets specific to different countries and cultures. The goal is to address overrepresentation in general datasets and improve bias evaluation techniques.

The impact of biased AI extends beyond visual representation. It can have profound educational and professional consequences, particularly for Black and Brown women and girls. Heather Hiles, chair of Black Girls Code, emphasizes that individuals learn from seeing themselves represented, and the absence of such representation may lead them to feel excluded. This exclusion, reinforced through biased images, can create significant barriers.

Moreover, AI systems have been previously criticized for discriminating against Black women. Commercial facial-recognition products and search algorithms frequently misidentify and underrepresent them, further emphasizing the need to tackle these biases at their root.

The issue of biased AI models has caught the attention of lawmakers and regulators. European Union legislators are currently discussing proposals to introduce safeguards against AI bias, while the United States Senate has held hearings to address the risks associated with AI technologies and the necessity of regulation. These efforts aim to ensure that AI technologies are developed with ethical considerations and transparency in mind.

As the market for generative AI models continues to grow, the need for ethical and unbiased AI becomes increasingly urgent. The potential impact on society and the economy is immense, and failure to address these biases could hinder progress toward a more equitable future. It is imperative that AI developers, regulators, and society as a whole work together to mitigate biases, promote diversity, and shape AI technologies that benefit everyone.

by Ayesha Hasnain via Digital Information World

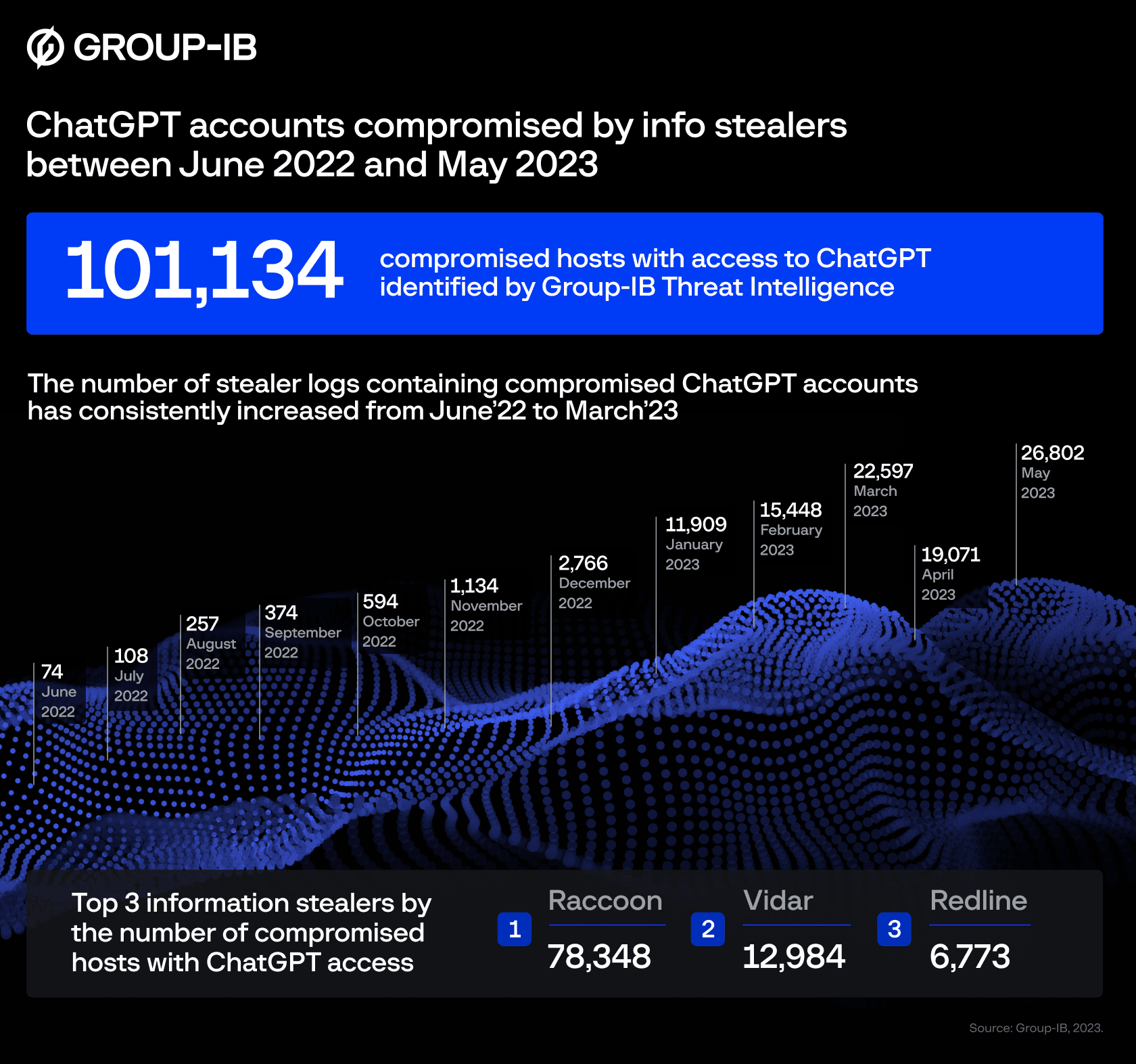

Security Researchers Raise The Alarm As More Than 100,000 ChatGPT Accounts Breached By Hackers

OpenAI has come under a lot of pressure recently after users' ChatGPT accounts were targeted by hackers.

More than 100,000 accounts were reportedly breached. According to the reports, the breach is a clear sign of how weak the security in place was and of why account holders should adopt better password practices to ward off such risks.

Two-factor authentication is just one of the many suggestions put forward, while others involve stricter usage policies.

Reports go further, stating that personal data from the hacked accounts was compromised and is now being sold in various places on the dark web.

Users are understandably unhappy and are demanding an explanation, while others are holding off on creating new accounts until security improves.

The incident did not happen overnight; it has been unfolding for about a year, between May of last year and June of this year. The report, produced by Group-IB, says the data was uncovered in stolen data logs that were put up for sale by various services catering to cybercrime of this kind.

India had the most breached accounts. More than 12,000 users' accounts were hacked there, more than in any other nation.

That is not surprising given that the country's tech industry is booming and is adopting the platform with open arms, using AI to improve client service and enhance workforce productivity.

Several other nations follow closely among the most affected, including Pakistan, Egypt, the US, Bangladesh, Brazil, France, and Vietnam.

Such a wide impact shows how popular this form of AI technology is across many places and cultures. Companies that depend heavily on AI technology for their progress and development can ill afford mishaps like this at OpenAI.

Stealing personal data is a cybercrime the world has been dealing with for a long time, and having one of the world's most popular tools targeted can only spell more trouble.

by Dr. Hura Anwar via Digital Information World

AI Could Automate or Augment 40% of Total Hours Worked in the U.S

The future of work is here, and it's being shaped by artificial intelligence. According to an Accenture study, large language models have strong potential to automate or augment 40% of all hours worked in the United States this year.

Approximately two-thirds of positions in the United States that deal with language have a strong potential for automation or AI augmentation, according to recent research incorporating data from the U.S. Department of Labor, the Bureau of Labor Statistics, and the Occupational Information Network. A startling 62 percent of all working time is today devoted to tasks using language.

Given that AI is present in many sectors of the economy, from healthcare to finance, and that it may reduce the difficulty and expense of completing certain activities, the potential impact of AI on language-related occupations is particularly significant. AI has been hailed as transforming numerous sectors.

Artificial intelligence (AI) can help you uncover hidden insights. AI may find trends that people would miss because of its capacity to analyze enormous volumes of data quickly and accurately. But don't worry; technology will not replace human perception and intuition.

Even if AI is used in many different tasks, human connection will still be needed. There will be an increase in employment opportunities as brand-new roles, including AI editors and controllers, emerge. Accenture contends that to succeed in this new environment, we must give people, education, and training the same priority as technology.

The most significant potential for AI integration is seen in the banking and insurance sectors, where 66% of hours worked have the potential to be enhanced or automated by AI. Chemical and resource sectors, where the majority of workers' time is spent on non-linguistic tasks, are the least impacted industries.

AI technology will help generate new possibilities and occupations in the long term. Up to 40% of all U.S. working hours might be improved or automated by AI, transforming the nature of employment in the future.

Robots and humans can benefit from technology, but we must use it responsibly. We must all maximize our potential and steer clear of any dangers. As AI continues its rapid development, one thing is clear: large language models like ChatGPT could significantly impact how we work this year and beyond.

by Arooj Ahmed via Digital Information World

Tuesday, June 20, 2023

Tech Companies Will Be the Next Global Superpower According to This Political Scientist

For much of the 20th century, global influence was divided between two major superpowers: the United States of America in the West and the Soviet Union in the East. The collapse of the Soviet Union dismantled this bipolar world order, and a very different type of world took its place.

In the aftermath of the Soviet collapse, major players like China started to emerge. China was incorporated into the Americanized world order under the assumption that it would become increasingly Western as its wealth continued to grow. However, it turned into a genuine great power in its own right, spreading soft and hard power even more widely across the globe.

The globalization that occurred around the turn of the millennium also left many citizens of wealthy democracies feeling that they were not getting what they were owed. This fed a feeling that the governments of the world lacked legitimacy.

Political scientist Ian Bremmer claims that these three factors have left the world leaderless for now. He asks what will happen when global leaders finally start to emerge in the next decade or so.

Contrary to the assumption that another bipolar world will arise, Bremmer asserted that three overlapping world orders will emerge. The US continues to hold enormous sway in the so-called security order, especially with China increasing its military presence in Asia, which is forcing American allies to become more dependent on the superpower. The Russian invasion of Ukraine has also made this happen faster than might have been the case otherwise.

This unipolar order may stay right where it is for a few more years, but the US is slipping behind in the global economic order. American military dominance does not translate into economic influence, and that has given China the chance it needed to challenge US economic hegemony.

By 2030, China is predicted to be the largest consumer market in the world. This makes a Cold War between the US and China far less likely, since few if any countries would want to take a side in such a conflict.

With the global economic order clearly becoming multipolar, the US may use its considerable national security infrastructure to encourage economic growth within its own borders. We have already seen this with the semiconductor ban as well as the potential TikTok ban. China, meanwhile, is trying to dominate with its economic might.

Other major nations such as India will try to prevent any hegemony from coming into being. The digital order (including cyber security) is shifting power away from governments and into the hands of tech corporations, and this is becoming a major influence on military and economic affairs as well.

The Ukrainian defense against Russia relied heavily on tech companies and CEOs. This shows that tech companies are growing their influence, and the rise of Donald Trump is yet another indication of this. Social media is connecting people, but it is also boosting access to fake information and news, and many consider this to be directly responsible for the attack on the US Capitol.

Hence, it is fairly likely that the digital order will be where any potential Cold War takes place. Tech companies tend to think globally, leading to something called digital globalization, and this is becoming the third sphere of influence that goes beyond nation states.

Bremmer even said that tech companies will become dominant because their rise could end up benefiting all sides. The decisions they make will be hugely influential for the direction of the world in the future.

by Zia Muhammad via Digital Information World

YouTube Gives British Users Greater Access To Accurate And Reliable Health Information

Video sharing platform YouTube is providing greater access to a range of its services to those situated in the United Kingdom. The goal of Google at the moment seems to be expanding more reliable sources of data to those situated in the country’s health industry.

This means people across the United Kingdom may apply to have such channels authorized if they require it.

Members of various health sectors will now be promoted through tools similar to those that highlight the most reliable healthcare sources across America. Among these are shelves of health information that appear on the search results page when a search relates to certain illnesses or conditions.

Videos that appear in these places are shown in panels that display the creators’ credentials. By providing accurate details about such conditions, the company hopes to limit the amount of misinformation that continues to spike.

Remember, the pandemic is the best example of how we witnessed all sorts of facts come out about vaccines and obviously, not everything was correct in that regard.

In places like the US, we’ve seen how far some people can go in terms of making a change and providing viewers with the most accurate types of data. From a new policy on eating disorders to the addition of personal stories from those who have been through them, there’s a lot to consider.

YouTube has worked hard to ensure its fact-checking is at the top of its game. We’ve seen it establish partnerships with many institutes across the United Kingdom, as well as the WHO, that set the standard for accurate sources of health information.

It can be quite hard to tell which sources of health information can be trusted. The company takes great pride in addressing that, which includes following the right principles so that everyone involved can benefit.

Many healthcare providers across the country are now being given the chance to apply for the app’s exclusive features. You can find the prerequisites and submit an application through the platform’s hub allocated for this purpose, which is also open to medical professionals in Germany, the US, and Mexico, among other nations.

by Dr. Hura Anwar via Digital Information World

Twitter's Content Moderation Concerns Remain as Major Brands Advertise Amidst Neo-Nazi Propaganda

Since Elon Musk took over as CEO of Twitter last year, the platform has experienced strained relationships with advertisers. Content moderation concerns prompted major brands like Disney, Microsoft, and the NBA to withdraw their advertisements. However, the selection of Linda Yaccarino, a former NBC Universal executive, as the new CEO of Twitter alleviated concerns among advertisers. Her appointment raised hopes for a better rapport between brands and the social media platform. Nevertheless, recent occurrences have brought attention to the persistent obstacles that Twitter encounters in maintaining a safe space for brands. Situations have arisen in which advertisements from well-known companies like Microsoft, Adobe, and Disney were discovered alongside content promoting neo-Nazi ideology.

After Elon Musk took over as the head of Twitter and implemented substantial reductions to the content moderation teams, prominent brands such as General Mills, Audi, and GM withdrew their advertisements due to concerns about protecting their brand image. The prevalence of hate speech on the platform increased, and Twitter's revenue declined significantly when these advertisers departed. Nevertheless, under the new leadership of CEO Linda Yaccarino, many of these brands have resumed their advertising activities on the platform. According to Musk, the majority of advertisers have either come back or expressed their intention to do so.

In November of last year, GroupM, the advertising industry's largest agency, categorized Twitter ads as risky for brands. It was concerned about verified accounts pretending to be influential users and about Musk's downsizing of staff. However, GroupM removed this categorization after Yaccarino took over as CEO. Despite this positive development, Twitter's policies and content moderation approaches have not undergone substantial changes. Alejandra Caraballo, a legal expert specializing in civil rights and a clinical instructor at Harvard Law School, raises concerns about Twitter's ability to serve as a secure advertising platform for prominent brands.

Caraballo observed a rise in advertisements on Twitter and took it upon herself to examine if these ads were being shown alongside posts from neo-Nazi and terrorist accounts that she follows for research purposes. Her investigation revealed that ads from well-known brands such as Disney, ESPN, the NBA, Adobe, and Microsoft were being displayed in proximity to content that promotes antisemitism. One example was the appearance of ads when searching for "Europa: The Last Battle," a lengthy film containing propaganda with antisemitic themes. Instead of addressing the underlying issue by moderating the content itself, Twitter chose to limit the specific search, a response that deeply troubles Caraballo.

Caraballo expanded her investigation and discovered additional instances where advertisements were displayed alongside offensive content that brands usually steer clear of. Although explicit antisemitic terms and derogatory language are identified and flagged, more subtle or less familiar terms may go unnoticed. This situation is worrisome because brands that advertise on Twitter might unintentionally contribute financial support to extremist content, leading to the normalization and dissemination of far-right propaganda to previously untapped audiences.

Despite requests for comment, Twitter's press department offered only an automated response regarding the recent incidents. These incidents underscore the importance of enhancing content moderation on the platform to safeguard brands and prevent their ads from being displayed alongside extremist and hateful content. As more major brands resume advertising on Twitter, ensuring brand safety becomes crucial.

H/T: Business Insider

by Ayesha Hasnain via Digital Information World
