A recent study from Binghamton University in New York has discovered that on social media, the more unusual an idea is, the more likes it tends to get. This study looks at a variety of topics, not just the typical issues like politics or religion.

It even covered everyday choices such as food and lifestyle, suggesting that almost any subject can become polarizing online.

The research team used two main methods to gather their data. First, they ran online experiments with students at a U.S. university. The students completed tasks designed to mimic activity on Twitter-like social media platforms. They worked on creative projects such as writing marketing taglines for laptops and crafting short stories, which required different levels of collaboration. This controlled setting allowed the researchers to observe how ideas were shared and received within a specific group.

The second method involved analyzing posts from GAB, a social media site known for its far-right user base, using data from 2016 to 2021. This part of the study looked at around 147,000 posts from about 3,000 users. The researchers cleaned the text of these posts and used Doc2Vec, a technique that represents each document as a numerical vector, to compare their content. They were looking for ideas that stood out as different from the norm within that community.

In both the university experiments and the GAB analysis, the results were consistent: the more an idea strayed from what was common in that group, the more engagement it received. This pattern was especially clear on GAB, where the most-liked posts were those farthest from the platform's average discussion.
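The write-up does not spell out how "distance from the average" was measured. The Python below is only a rough sketch. It assumes each post has already been turned into a vector (for example by Doc2Vec) and scores a post's distinctiveness as its cosine distance from the community's mean vector. It then checks whether that score correlates with likes. The function names, the choice of cosine distance and Pearson correlation, and the toy data are all hypothetical. None of them are the researchers' actual method.

```python
import math
from typing import List, Sequence


def cosine_distance(a: Sequence[float], b: Sequence[float]) -> float:
    """Return 1 - cosine similarity; assumes neither vector is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return 1.0 - dot / (norm_a * norm_b)


def distinctiveness(vectors: List[Sequence[float]]) -> List[float]:
    """Hypothetical metric: each post's cosine distance from the mean post vector."""
    dim = len(vectors[0])
    centroid = [sum(v[i] for v in vectors) / len(vectors) for i in range(dim)]
    return [cosine_distance(v, centroid) for v in vectors]


def pearson(xs: Sequence[float], ys: Sequence[float]) -> float:
    """Pearson correlation between two equal-length sequences."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sd_x = math.sqrt(sum((x - mean_x) ** 2 for x in xs))
    sd_y = math.sqrt(sum((y - mean_y) ** 2 for y in ys))
    return cov / (sd_x * sd_y)


# Hypothetical toy data: three 2-D "post vectors" and their like counts.
post_vectors = [[1.0, 0.0], [0.9, 0.1], [0.0, 1.0]]
likes = [10, 5, 40]

scores = distinctiveness(post_vectors)
print([round(s, 3) for s in scores])
print(round(pearson(scores, likes), 3))
```

With real data, the vectors would come from a trained embedding model and the like counts from platform metadata. An all-zero vector would need to be filtered out first, because cosine distance is undefined for it.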

The study's findings indicate that on social media, sharing more distinctive or radical opinions can make a person more popular. This trend could lead people to gradually shift their views to become more extreme over time. As these views get more attention and likes, they begin to seem more normal to the community, potentially leading to a cycle of increasingly extreme content.

This research highlights the need for more studies on how these dynamics develop and what might be done to address the negative effects they could have on society. The study was a comprehensive effort to understand how the desire for social acceptance and influence could drive people to adopt and express more radical opinions online.

Image: DIW-Aigen

Read next: Most AI Systems Have Become Experts At Deceiving Humans, New Research Proves

by Mahrukh Shahid via Digital Information World

"Mr Branding" is a blog based on RSS for everything related to website branding and website design, it collects its posts from many sites in order to facilitate the updating to the latest technology.

To suggest any source, please contact me: Taha.baba@consultant.com

Monday, May 13, 2024

OpenAI CEO Sam Altman Urges International Agency for AI Regulation Amid Growing Concerns

The rapid development and adoption of AI have raised considerable concern in the tech world.

This is why companies have been calling on regulators to introduce stringent laws that keep AI in check before things get out of hand.

In a recent discussion, OpenAI CEO Sam Altman made it clear that he believes an international agency is needed to oversee AI. In his view, this is a better approach than laws that are poorly drafted and inflexible.

Altman compared AI to airplanes, arguing that appropriate safety frameworks need to arrive faster than they are arriving now. That the maker of ChatGPT is promoting this idea is significant news.

Image: All-In Podcast / YT

This is not the first time he has spoken about the risks AI systems could pose. While discussing the matter on a podcast last week, Altman said serious harm could arise decades from now if AI is not properly regulated.

These systems could cause serious harm that extends beyond the borders of any single nation. For that reason, he is calling for a single agency to oversee the most powerful systems and ensure they undergo proper safety testing.

Altman added that only with the right oversight in place can the world strike the right balance in how it uses AI. When you think about it, his reasoning makes sense.

OpenAI appears as concerned about regulatory overreach as about inaction. Either too much is done, or too little is done too late, and in both cases things can go in the wrong direction.

AI regulation is, in fact, already underway. In March of this year, the EU gave the green light to the AI Act, which categorizes AI uses by risk and bans those deemed unacceptable.

In the past year, President Biden also signed executive orders calling for more transparency and holding the creators of some of the biggest AI models accountable.

Bloomberg also recently reported that US states such as California are taking charge of AI regulation, with close to 30 AI bills moving toward approval.

Still, the OpenAI CEO maintains that the best solution is an international agency, which can offer greater flexibility than local laws given how fast AI is evolving.

The reasoning behind this approach is simple. Even the world's best experts may struggle to write policies today that will still regulate AI correctly one or two years from now.

Simply put, Sam Altman knows what he is talking about. AI needs to be regulated the way aircraft are, because poor safety can have serious consequences.

No one wants to see any loss of human life, and the possibility that AI could cause it is alarming for obvious reasons. As with airplanes, many people would be glad to have some kind of safety framework in place, even an experimental one.

Read next: Elon Musk Questions Security of Signal

by Dr. Hura Anwar via Digital Information World

Google Search Users Opting Out of AI-Generated Results: Traditional Search on the Rise?

Data from BrightEdge shows a significant increase in the share of Google search queries without SGE (Search Generative Experience), which rose from 25% in April 2024 to 65% just before May 2024. The spike coincided with speculation that SGE might launch before or during Google I/O. SGE was still an opt-in result type, so the surge in queries without it suggests that users may prefer traditional search results over AI-generated content.

Concerns among SEOs are growing as Google SGE moves from an opt-in experiment to the default experience. Google's ongoing testing appears aimed at refining search results. For example, the size of SGE results shrank from approximately 1,200 pixels in April 2024 to under 1,050 pixels in May 2024.

Furthermore, the format of SGE experienced a decline in April, while apparel carousel results saw an uptick. Although classic search remains pivotal, the emergence of Artificial Intelligence Optimization (AIO) signals a shifting landscape in search dynamics.

As Google continues to refine SGE, marketers must adapt to the evolving landscape of generative AI search to keep delivering a good search experience, and users will have to adapt as well.

Read next: Meta Shifts Away From News As Facebook Publisher Referrals Drop 50%

by Arooj Ahmed via Digital Information World

Sunday, May 12, 2024

DRAM Prices Set to Surge 13-18% in Q2 2024, TrendForce Forecasts Amid AI Chip Demand

According to market research firm TrendForce, DRAM prices will rise 13-18% in Q2 2024. TrendForce had earlier forecast only a slight 3-8% increase for Q2, but it revised its estimate after DRAM prices rose 20% in Q1 2024. Its NAND flash forecast came closer to the mark. TrendForce had predicted a 13-18% rise in NAND prices for Q1 2024, and the actual increase was 15-20%.
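Here is a rough illustration of what the two DRAM figures mean together. It assumes that the 20% Q1 rise and the forecast 13-18% Q2 rise are both quarter-over-quarter changes applied to the same price. Under that assumption the increases compound rather than simply add:

$$1.20 \times 1.13 = 1.356, \qquad 1.20 \times 1.18 = 1.416$$

Under that assumption, DRAM prices would end Q2 roughly 36-42% above their end-of-2023 level.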

There are several reasons for the increase, but the biggest is that many companies are now devoting their resources to HBM for AI chips. HBM is far more expensive than standard DRAM, costing about five times as much as DDR5. HBM is also crowding out production capacity, and with an HBM shortage expected, many sellers raised the prices of their stock in Q2.

TrendForce also reported that Samsung's HBM3e, the fastest type of HBM, will reach only about 60% of its maximum capacity in 2024, which will constrain DDR5 suppliers. HBM3e production is likely to increase in Q3 2024.

Nvidia supplier SK Hynix has reported that its HBM for AI chips is sold out for 2024 and for most of 2025. Micron reported similar supply constraints for its HBM products in 2024 and 2025. If this trend continues, annual HBM demand is expected to reach 200% in 2024 and 400% in 2025.

Read next: Counterpoint Research Shows Smartphone Shipment in the US Has Declined in Q1 2024

by Arooj Ahmed via Digital Information World

Most AI Systems Have Become Experts At Deceiving Humans, New Research Proves

AI was supposedly designed to improve human lives, but a new study suggests there is more to it than meets the eye.

In a review article recently published in the journal Patterns, the authors report that many of today's AI systems have become skilled at deceiving and manipulating humans.

This risk applies even to systems trained for other purposes, such as being helpful and truthful.

This is why the authors are urging governments and regulators to act before the problem gets out of hand, as time is of the essence.

They call for stricter rules and regulation to be implemented immediately. What is troubling is that many AI developers do not understand why or how this behavior emerges, or how to prevent it.

Why does deception take hold when it was never the intention? One explanation is that a deceptive strategy can turn out to be the most effective way for a model to perform well at the goal it was trained to achieve.

The researchers reviewed the literature to identify ways in which AI systems spread false information through learned deception strategies. In the process, these systems have learned to manipulate people with ease.

One of the most striking examples the authors highlight is CICERO, a Meta system built to play Diplomacy, a strategy game that revolves around forming alliances, something CICERO proved very good at.

Meta, however, pushes back on such claims. It says the system was designed to be honest and helpful, and that it never expected the system to be accused of backstabbing.

According to the research, the system is far from playing fair, having become an expert at deception. Meta succeeded in training its AI to win, but not to win honestly. Other systems showed similar behavior, bluffing and faking attacks in strategy games such as StarCraft to defeat other players.

Likewise, some AI systems have learned to misrepresent their positions to gain the upper hand in negotiations.

Cheating at games may seem harmless. But if AI systems can cheat at games, they can do the same in real life, and this is already happening before our eyes. Deception could spiral out of control in the future.

Moreover, some of these systems can even get around tests designed to check how safe they are. In one example cited in the review, AI agents learned to "play dead" to evade a test meant to detect and eliminate rapidly replicating systems.

By cheating on safety tests run by developers and regulators, deceptive AI systems could give humans a false sense of security, which is obviously a major problem.

It may seem like a small problem now, but the future risks are serious. Deceptive AI could help hostile actors commit fraud, rig elections, and cause other harms.

Worse still, some critics and experts warn that humans could lose control of these systems entirely. The authors do not believe adequate safeguards are in place yet. Measures such as the European Union's AI Act and the Biden administration's AI Executive Order show only that policymakers are beginning to take the issue seriously.

It remains unclear whether policies aimed at curbing AI deception can be strictly enforced, since developers do not yet have reliable techniques to keep these systems in check, and that is dangerous.

The study's take-home message is that if AI deception cannot be stopped right now, deceptive AI systems should be classified as high risk.

Image: DIW-AIgen

Read next: Can Generative AI Be Manipulated To Produce Harmful Or Illegal Content? Experts Have The Answer

by Dr. Hura Anwar via Digital Information World

Saturday, May 11, 2024

Meta Shifts Away From News As Facebook Publisher Referrals Drop 50%

It’s quite clear that Meta was not joking when it spoke about walking away from the world of news.

A new Press Gazette report based on Chartbeat and Similarweb data shows that Facebook's referral traffic to publisher sites has dropped 50% year over year.

The analysis covered 792 news and media websites, using third-party tracking data to measure the traffic Facebook referred to them. The results are clear.

According to Press Gazette, combined Facebook traffic to news and media sites has fallen 58% compared with six years ago. The 50% drop in the past year alone suggests the decline is not slowing down anytime soon.

The data also shows that Facebook now drives less than 25% of the visits it did in 2018, with its share falling from 30% to just 7%.
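The 30% and 7% figures line up with the "less than 25%" claim, under two assumptions. The first is that these are Facebook's share of the sites' total traffic in 2018 and today. The second is that total traffic stayed roughly the same. The arithmetic is:

$$\frac{7\%}{30\%} = \frac{7}{30} \approx 0.233 < 0.25$$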

Smaller players in the industry have been hit especially hard, with Facebook referrals dropping sharply year over year.

Slowly but surely, Meta is reducing the visibility of news content. By the end of last year, it had wound down the news projects that were once its main source of support for news publishers.

Meta says it has been looking for ways to limit the reach of political content across its apps, because users want to see more positive content. As a result, political content is now limited by default, and users have to opt in to see more of it.

This is part of a slow but significant shift toward feeds driven by AI recommendations, most of which are video clips. The shift also lets Meta use AI to limit news-related posts.

It also reflects a move away from engagement signals such as likes and comments as the main driver of reach. Instead, the algorithm now plays a much bigger role in deciding what is shown to the masses.

Over time, people have been engaging less with the app. The company therefore appears to be relying more on entertainment and video to keep users glued to their screens.

A further benefit is that political debates and arguments are no longer being promoted.

In 2021, the company's CEO said Meta's users had told the company they wanted to see less politics and violence in their feeds, which was said to dampen the overall user experience.

This became more evident after the Capitol riot, when Zuckerberg was called to testify before Congress about what role his apps had played in instigating and promoting the violence.

That was not the first time lawmakers had called on the Meta CEO to answer questions on political matters. It marked the start of Meta's efforts to move away from news and politics altogether and keep a firm distance from these concerns.

This came as a big blow to publishers and led to massive shifts. For years, Facebook had been accused of promoting disinformation. With about a third of the US population getting news through the app, its role was clearly significant.

This may be why Russian and Chinese influence campaigns have so often targeted the app, using its huge reach to sway voters in different places.

If the platform stops displaying news altogether, such campaigns become much harder. The shift also reduced scrutiny of the platform for a while and meant fewer negative headlines were being promoted.

If Meta can find ways to boost user engagement without these headaches, Zuckerberg and his team will have good reason to be proud of their efforts.

The take-home message here is clear. Meta might feel the need to step back from the world of news and any kind of political-themed content if it wishes to steer clear of trouble. While the matter is not a moment of celebration for publishers of news content online, it might mean that social media is going to be a more positive area where users can thrive and benefit.

Read next: Instagram Teases New Creator Revenue Program Called ‘Spring Bonus’ As Adam Mosseri Compares App To Other Arch Rivals

by Dr. Hura Anwar via Digital Information World

Friday, May 10, 2024

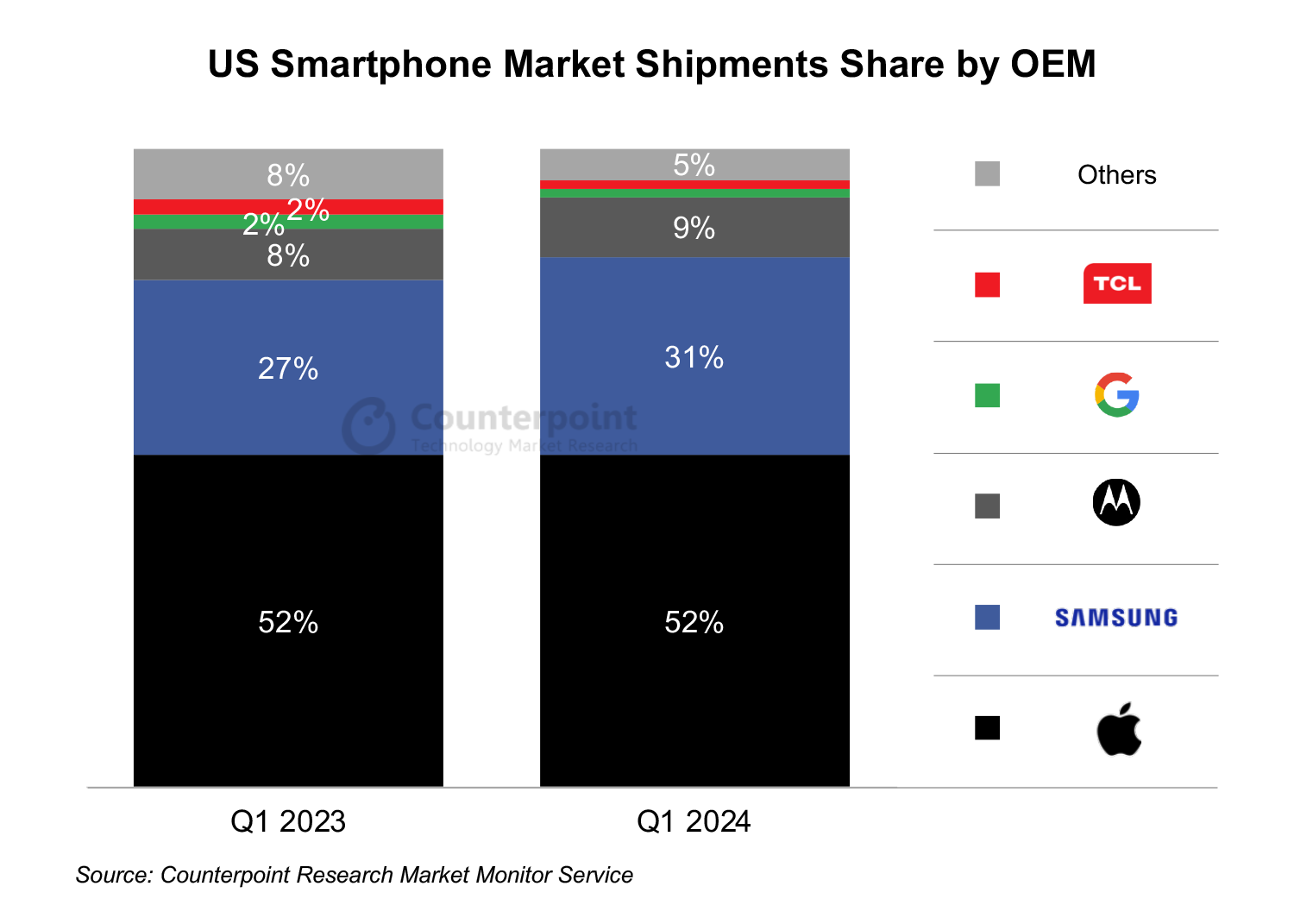

Counterpoint Research Shows Smartphone Shipment in the US Has Declined in Q1 2024

According to Counterpoint Research's Market Monitor data, US smartphone shipments declined 8% year over year in Q1 2024. The decline reflects strong shipments in Q1 2023, when the market was getting back on track after COVID-19. Since then, the sub-$300 segment has declined continuously for several reasons. These include carriers' push for 5G, weakness in the prepaid market, and some OEMs pulling out of the market.

Jeff Fieldhack, Counterpoint Research's director for North America, says demand for smartphones remains weak, as reflected in lower revenues in Q1 2024. YoY shipments fell mainly because of an unfavorable comparison with iPhone 14 Pro/Pro Max shipments in Q1 2023. Despite this, Apple held a steady 52% market share in Q1 2024, while sub-$300 Android smartphones saw their shipments decline.

Counterpoint Senior Analyst Maurice Klaehne said that fewer new Android models and the push toward 5G devices have reduced Android shipments overall. The high cost of 5G is also keeping some OEMs from competing. Samsung grew its shipments slightly, helped by its S24 series, and its 31% market share in Q1 2024 was its highest since 2020.

Associate Director Hanish Bhatia said YoY shipment growth could return in Q3 and Q4 2024, driven by the holidays and new releases. He added that GenAI should also help drive shipments in the coming months.

Read next: Instagram Teases New Creator Revenue Program Called ‘Spring Bonus’ As Adam Mosseri Compares App To Other Arch Rivals

by Arooj Ahmed via Digital Information World
