This article is part of a series on building a sample application --- a multi-image gallery blog --- for performance benchmarking and optimizations. (View the repo here.)

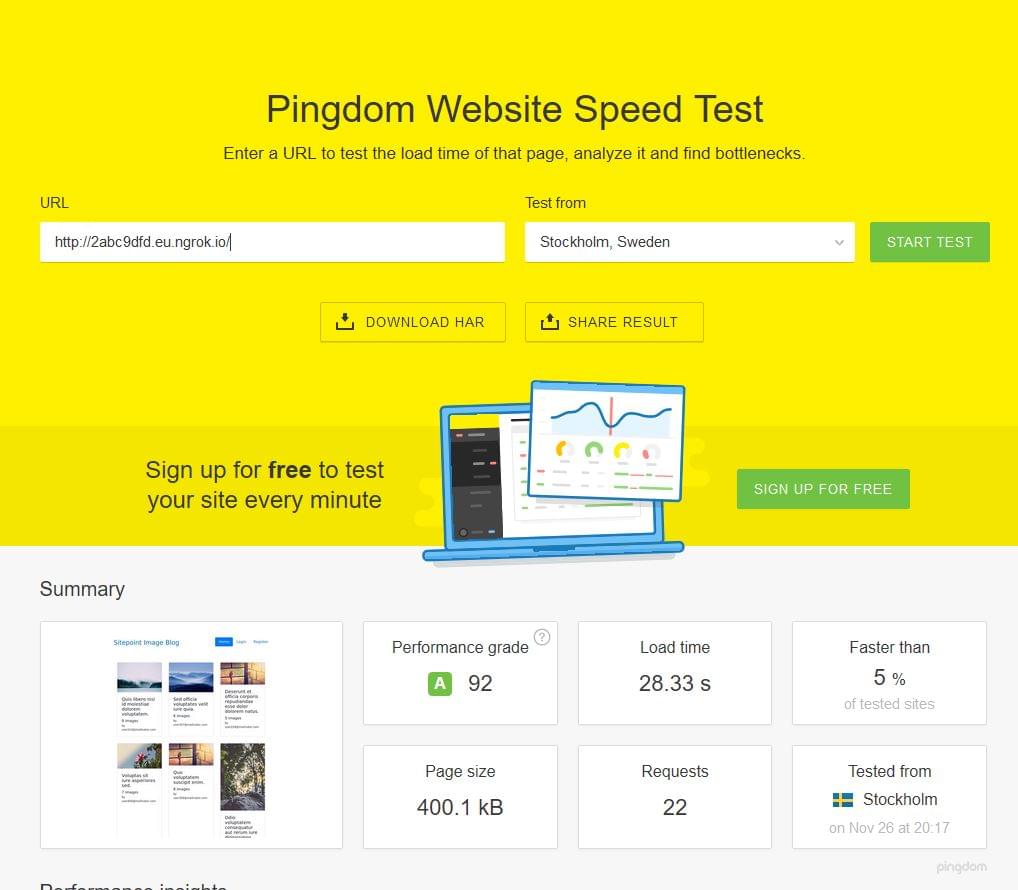

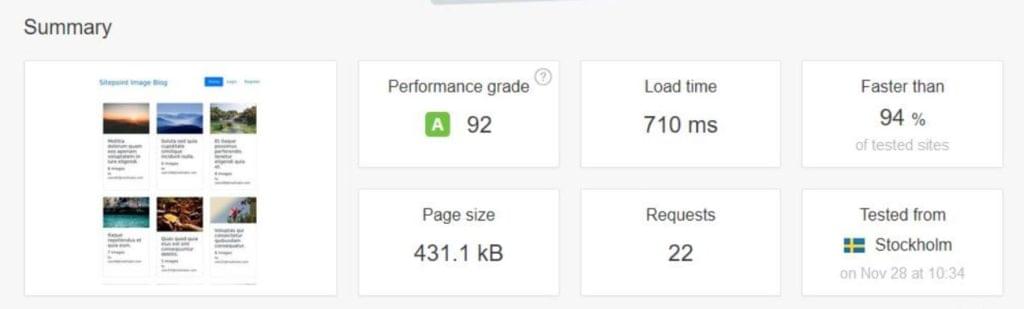

Let's continue optimizing our app. We're starting with on-the-fly thumbnail generation that takes 28 seconds per request in my setup. The exact figure depends on the platform running your demo app (in my case, the cause was a slow filesystem integration between the host OS and Vagrant). We'll bring it down to a pretty acceptable 0.7 seconds.

Admittedly, these 28 seconds should only occur on the initial load. After the tuning, we were able to achieve production-ready times:

Troubleshooting

It is assumed that you've gone through the bootstrapping process and have the app running on your machine --- either virtual or real.

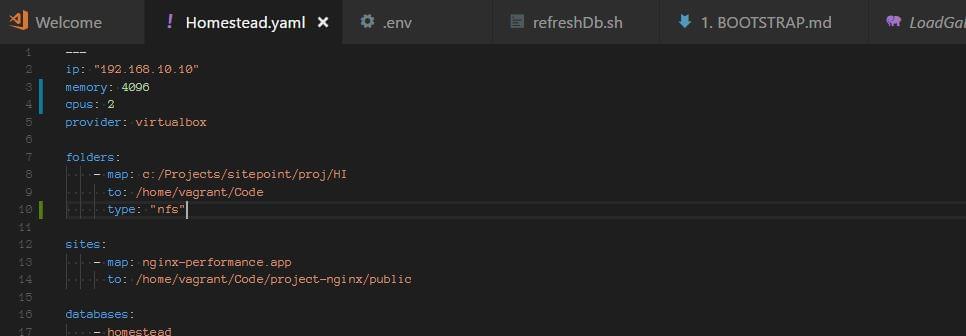

Note: if you're hosting the Homestead Improved box on a Windows machine, there might be an issue with shared folders. This can be solved by adding the type: "nfs" setting to the folder entry in Homestead.yaml.

If problems persist, you should also run vagrant up from a shell/PowerShell window that has administrative privileges (right-click, run as administrator).

In one test before applying this fix, we got 20 to 30 second load times on every request, and couldn't get a rate faster than one request per second (it was closer to 0.5 per second):

The Process

Let's go through the testing process. We installed Locust on our host, and created a very simple locustfile.py:

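    # Note: HttpLocust, task_set and min_wait/max_wait are the pre-1.0 Locust API
    from locust import HttpLocust, TaskSet, task

    class UserBehavior(TaskSet):
        # A single task: request the app's home page
        @task(1)
        def index(self):
            self.client.get("/")

    class WebsiteUser(HttpLocust):
        task_set = UserBehavior
        # Each simulated user waits 300 to 1000 ms between requests
        min_wait = 300
        max_wait = 1000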

Then we downloaded ngrok to our guest machine and tunneled all HTTP connections through it, so that we could test our application over a static URL.

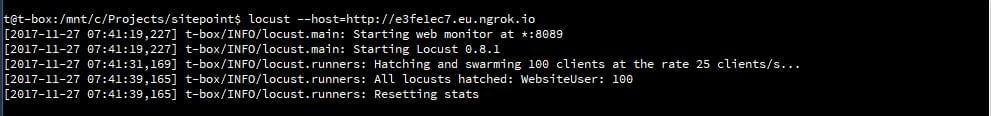

Then we started Locust and swarmed our app with 100 parallel users:

Our server stack consisted of PHP 7.1.10, Nginx 1.13.3 and MySQL 5.7.19, on Ubuntu 16.04.

PHP-FPM and its Process Manager Setting

php-fpm spawns its own processes, independent of the web-server process. Management of the number of these processes is configured in /etc/php/7.1/fpm/pool.d/www.conf (7.1 here can be exchanged for the actual PHP version number currently in use).

In this file, we find the pm setting. It can be set to dynamic, ondemand or static. Dynamic is probably the most common choice; it allows the server to juggle the number of spawned PHP processes within the limits set by several other settings:

    pm = dynamic

    ; The number of child processes to be created when pm is set to 'static' and the
    ; maximum number of child processes when pm is set to 'dynamic' or 'ondemand'.
    ; This value sets the limit on the number of simultaneous requests that will be
    ; served.
    pm.max_children = 6

    ; The number of child processes created on startup.
    ; Note: Used only when pm is set to 'dynamic'
    ; Default Value: min_spare_servers + (max_spare_servers - min_spare_servers) / 2
    pm.start_servers = 3

    ; The desired minimum number of idle server processes
    ; Note: Used only when pm is set to 'dynamic'
    ; Note: Mandatory when pm is set to 'dynamic'
    pm.min_spare_servers = 2

    ; The desired maximum number of idle server processes
    ; Note: Used only when pm is set to 'dynamic'
    ; Note: Mandatory when pm is set to 'dynamic'
    pm.max_spare_servers = 4

These values are largely self-explanatory: processes are spawned as demand requires, but their number is constrained by these minimum and maximum values.
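To make the relationship between these directives concrete, here is a small Python sketch that is not part of the original setup. It computes the default for pm.start_servers from the formula quoted in the config comments, and checks a set of dynamic values for consistency. The function names are hypothetical, and the rules are an assumption read off the comments above: spare-server counts must be positive and no larger than pm.max_children, and pm.start_servers must lie between the two spare-server limits.

```python
from typing import List


def default_start_servers(min_spare: int, max_spare: int) -> int:
    """Default from the www.conf comment: min + (max - min) / 2 (integer division)."""
    return min_spare + (max_spare - min_spare) // 2


def check_dynamic_pool(max_children: int, start: int,
                       min_spare: int, max_spare: int) -> List[str]:
    """Return a list of problems; an empty list means the values are consistent."""
    problems = []
    if min_spare <= 0 or max_spare <= 0:
        problems.append("spare server counts must be positive")
    if max_spare < min_spare:
        problems.append("max_spare_servers is smaller than min_spare_servers")
    if min_spare > max_children or max_spare > max_children:
        problems.append("spare server counts exceed max_children")
    if not (min_spare <= start <= max_spare):
        problems.append("start_servers is outside the spare server range")
    return problems


# Values taken from the configuration shown above
print(default_start_servers(min_spare=2, max_spare=4))
print(check_dynamic_pool(max_children=6, start=3, min_spare=2, max_spare=4))
```

With the values from the configuration above, the formula gives 3, which matches the pm.start_servers line, and the check reports no problems.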

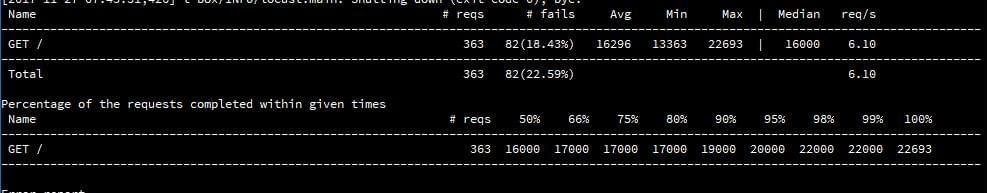

After fixing the Windows shared-folders issue with nfs, and testing with Locust, we were able to get approximately five requests per second, with around 17–19% failures, with 100 concurrent users. Once it was swarmed with requests, the server slowed down and each request took over ten seconds to finish.
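As a rough sanity check on numbers like these, a closed-loop load test can be related to response time with Little's law: the number of users equals the throughput multiplied by the time each user spends per cycle (response time plus wait time). The sketch below is only an estimate under stated assumptions: waits are spread evenly between min_wait and max_wait from the locustfile, every user is always either waiting or inside a request, and failed requests take as long as successful ones. The function names are hypothetical.

```python
def mean_think_time(min_wait_ms: float, max_wait_ms: float) -> float:
    """Average wait between requests in seconds, assuming an even spread."""
    return (min_wait_ms + max_wait_ms) / 2 / 1000


def implied_response_time(users: int, throughput_rps: float,
                          think_time_s: float) -> float:
    """Little's law for a closed loop: users = throughput * (response + think)."""
    return users / throughput_rps - think_time_s


think = mean_think_time(300, 1000)               # wait settings from locustfile.py
response = implied_response_time(100, 5.0, think)  # 100 users, about 5 requests/s
print(round(think, 2), round(response, 2))
```

Under these assumptions, 100 users at about five requests per second implies a cycle of roughly 20 seconds per user, which is in line with the observation that each request took over ten seconds once the server was saturated.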

Then we changed the pm setting to ondemand.

Ondemand means that there is no minimum number of processes: once the requests stop, all the processes will eventually stop. Some advocate this setting because the server won't spend any resources while idle, but for dedicated (non-shared) server instances it isn't necessarily the best choice. Spawning a process carries an overhead, and what is gained in memory is lost in the time needed to spawn processes on demand. The settings that are relevant here are:

    pm.max_children = 6

    ; and
    pm.process_idle_timeout = 20s;
    ; The number of seconds after which an idle process will be killed.
    ; Note: Used only when pm is set to 'ondemand'
    ; Default Value: 10s

When testing, we increased these settings a bit, since we had less need to worry about resources.

There's also pm.max_requests, which can be changed, and which designates the number of requests each child process should execute before respawning.

This setting is a tradeoff between speed and stability, where 0 means unlimited.

The ondemand setting didn't bring much change, except that we noticed a longer initial waiting time when we started swarming our application with requests, and more initial failures. In other words, there were no big changes: the application was able to serve between four and six requests per second. Waiting time and the rate of failures were similar to the dynamic setup.

Then we tried the pm = static setting, allowing our PHP processes to take over the maximum of the server's resources, short of swapping, or driving the CPU to a halt. This setting means we're forcing the maximum out of our system at all times. It also means that --- within our server's constraints --- there won't be any spawning overhead time cost.
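With pm = static, pm.max_children is the value that decides how close the pool gets to the server's limits. One common rule of thumb, shown here as a sketch rather than as the method used for this article, is to divide the memory left over for PHP by the average memory of a single PHP-FPM worker. The function name and all the numbers below are hypothetical; measure your own workers before relying on a figure like this.

```python
def static_max_children(total_ram_mb: int, reserved_mb: int,
                        avg_worker_mb: int) -> int:
    """Estimate pm.max_children so that the workers fit in RAM without swapping.

    total_ram_mb:  memory available on the server
    reserved_mb:   memory kept aside for the OS, Nginx, MySQL and so on
    avg_worker_mb: measured average memory of one PHP-FPM worker
    """
    if avg_worker_mb <= 0:
        raise ValueError("avg_worker_mb must be positive")
    usable_mb = total_ram_mb - reserved_mb
    # Always allow at least one worker, even on a very small machine
    return max(1, usable_mb // avg_worker_mb)


# Hypothetical box: 2 GB of RAM, 512 MB reserved, 64 MB per worker
print(static_max_children(total_ram_mb=2048, reserved_mb=512, avg_worker_mb=64))
```

For this hypothetical box the estimate is 24 workers. CPU capacity is not part of the calculation, so the result is best treated as an upper bound to test against.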

What we saw was an improvement of 20%. The rate of failed requests was still significant, though, and the response time was still not very good. The system was far from being ready for production.

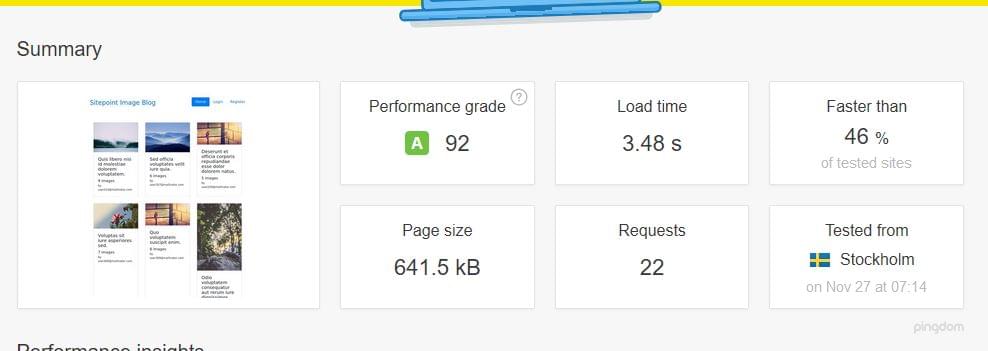

However, on Pingdom Tools, we got a bearable 3.48 seconds when the system was not under pressure:

This meant that pm = static was an improvement, but under a bigger load the system would still go down.

In one of the previous articles, we explained how Nginx can itself serve as a caching system, both for static and dynamic content. So we reached for the Nginx wizardry, and tried to bring our application to a whole new level of performance.

And we succeeded. Let's see how.

The post Server-side Optimization with Nginx and pm-static appeared first on SitePoint.

by Tonino Jankov via SitePoint
