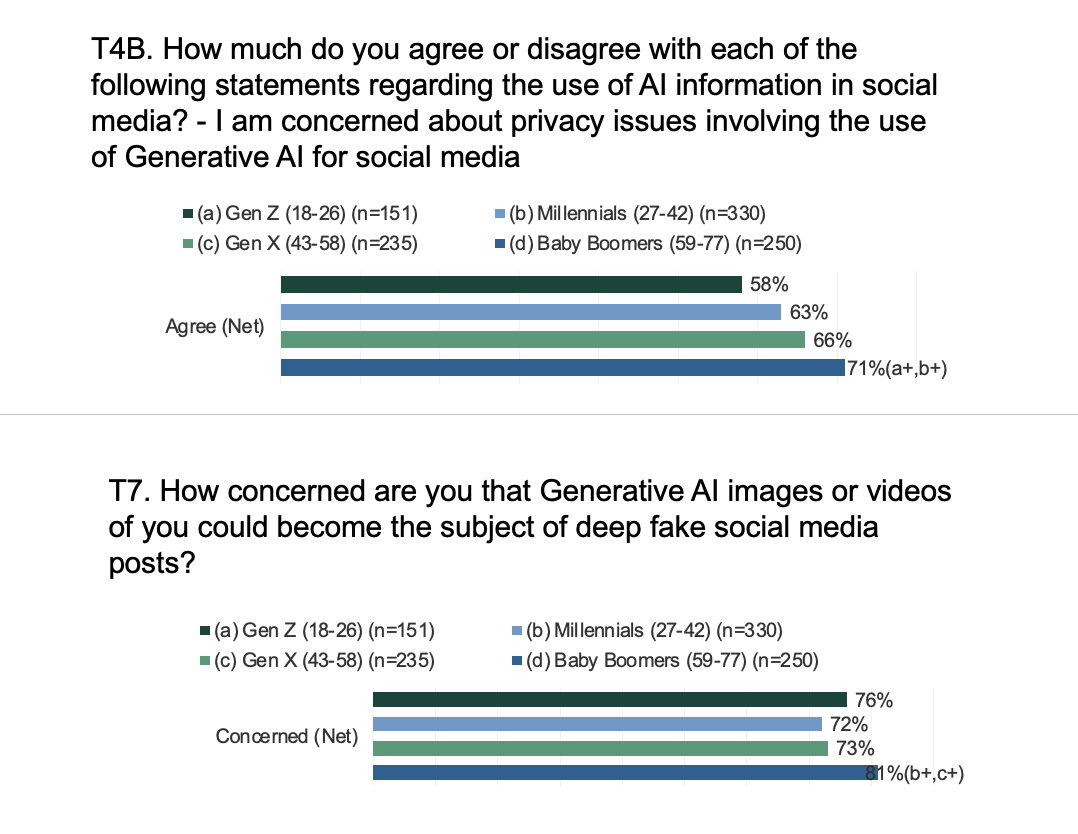

Generative AI has been one of the biggest leaps forward for tech in recent memory, yet many consumers are still approaching it with caution. A study from Big Village just revealed that as many as 76% of consumers are worried that images produced using generative AI could be abused, with 19% concerned that these abuses could be significant.

This could make generative AI less useful in social media advertising than it might otherwise have been. 66% of consumers are worried about how generative AI could affect their privacy, and 60% don't have a clear idea of how it even works in a social media context.

That said, 48% of the consumers who responded to this survey have already encountered generative AI on social media. 48% of them never want AI-generated faces used in social media advertising, and they go so far as to say that even photoshopped images should be banned.

Fake faces can be harmful because they could give an unfair representation of the products being depicted. That's why only 25% of consumers said they are comfortable with both photoshopped and AI-generated faces in the social advertising they see.

15% said that generative AI is fine but photoshopping isn't, and 13% said that photoshopped images are acceptable but AI-generated images are not. All in all, this will be a major challenge for the burgeoning AI industry to overcome. Marketers have been looking forward to using this technology for social marketing, but studies like this suggest that might not be a good idea.

by Zia Muhammad via Digital Information World