As AI continues to rise, brands are adopting it left, right and center. Generative AI has already had an enormous impact on the world around us because it has the potential to boost productivity and output. Even so, fears surrounding AI have risen by 26% in the US alone, which raises the question: how can brands use AI ethically?

Transparency is quickly becoming the name of the game. Generative AI mines massive quantities of data, which may leave consumers worried that their own data could end up in the wrong hands.

There is still a long way to go before governments agree on widespread regulations for AI. Until then, brands must do their part by maintaining a reasonable code of ethics.

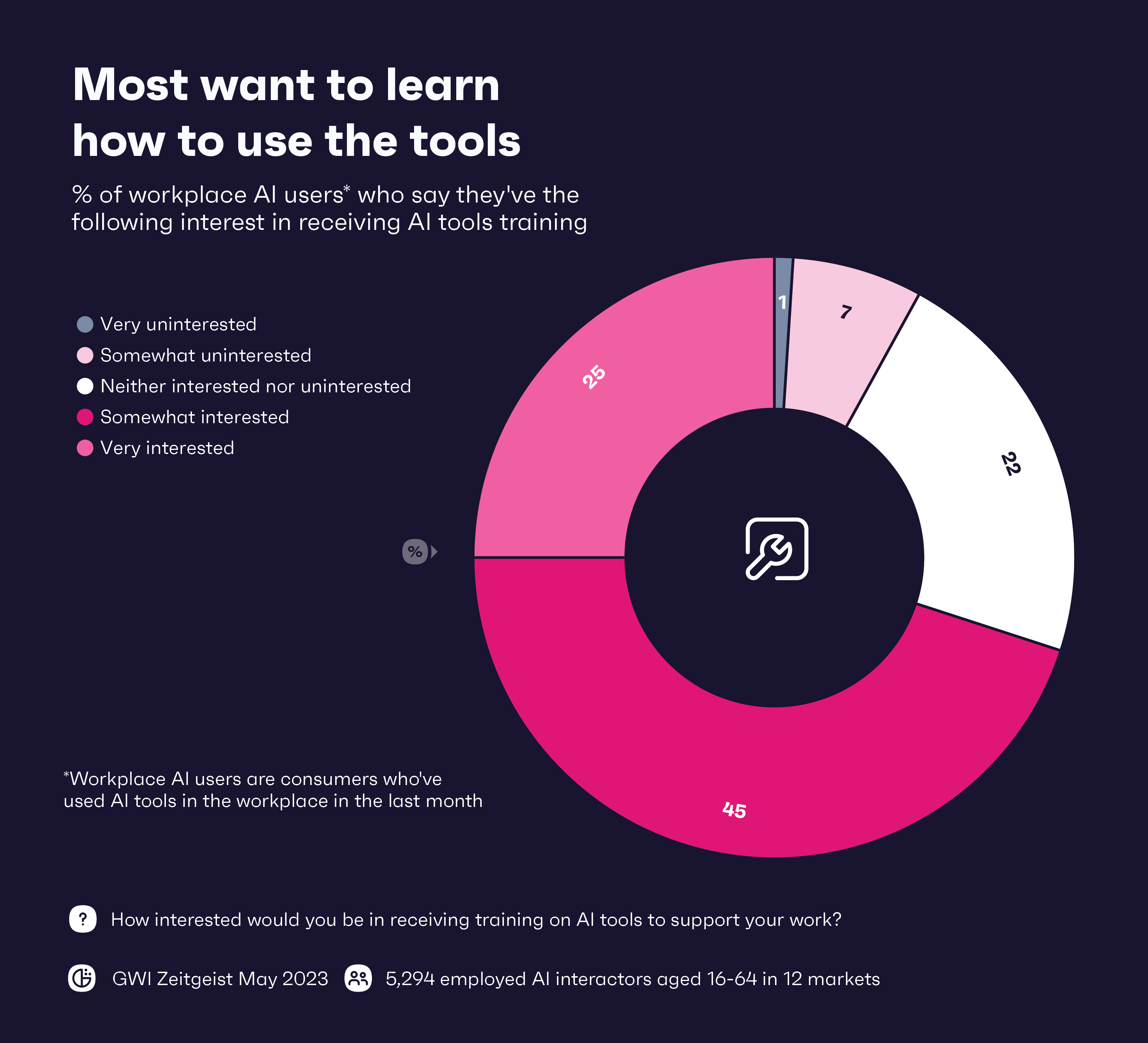

The key to securing the data that fuels generative AI tools such as ChatGPT is training staff to use them properly. As many as 71% of workers are willing to undergo this kind of training, which would leave them better equipped to handle that data.

Another major concern is how quickly AI is growing. Facebook took around four and a half years to reach 100 million users, while ChatGPT did the same in just two months. Such rapid growth has fueled a rise in fake news. Only 29% of consumers say they trust the news they see, since any given story could be a product of generative AI.

Overall, 64% of consumers said they are worried about the various unethical ways generative AI might be used. Deepfakes are just one example of how AI can be used maliciously: they blur the line between fiction and reality, and the two may become indistinguishable in the near future.

Furthermore, AI is by no means free of bias. The biases of the people who build AI systems carry over into the systems themselves. On top of that, the data being mined is itself full of biases and human error, so brands must factor this in going forward.

If brands want to use AI to obtain information, they need to fact-check that information multiple times. ChatGPT and other large language models (LLMs) are prone to hallucinations, in which they present false information as fact.

Passing on this false information without verifying it first could breed distrust between brands and their customers.

Finally, 25% of workers are worried they might lose their jobs to AI, and this concern must be addressed without delay before its implications grow more serious.

Source: Global Web Index

by Zia Muhammad via Digital Information World