One of the biggest questions surrounding AI is how it will affect jobs. Many are concerned that AI will make jobs obsolete because it can perform various tasks more efficiently and effectively than people can. Despite these concerns, a study by Gartner has revealed that AI will have a minimal impact on the availability of jobs by 2026.

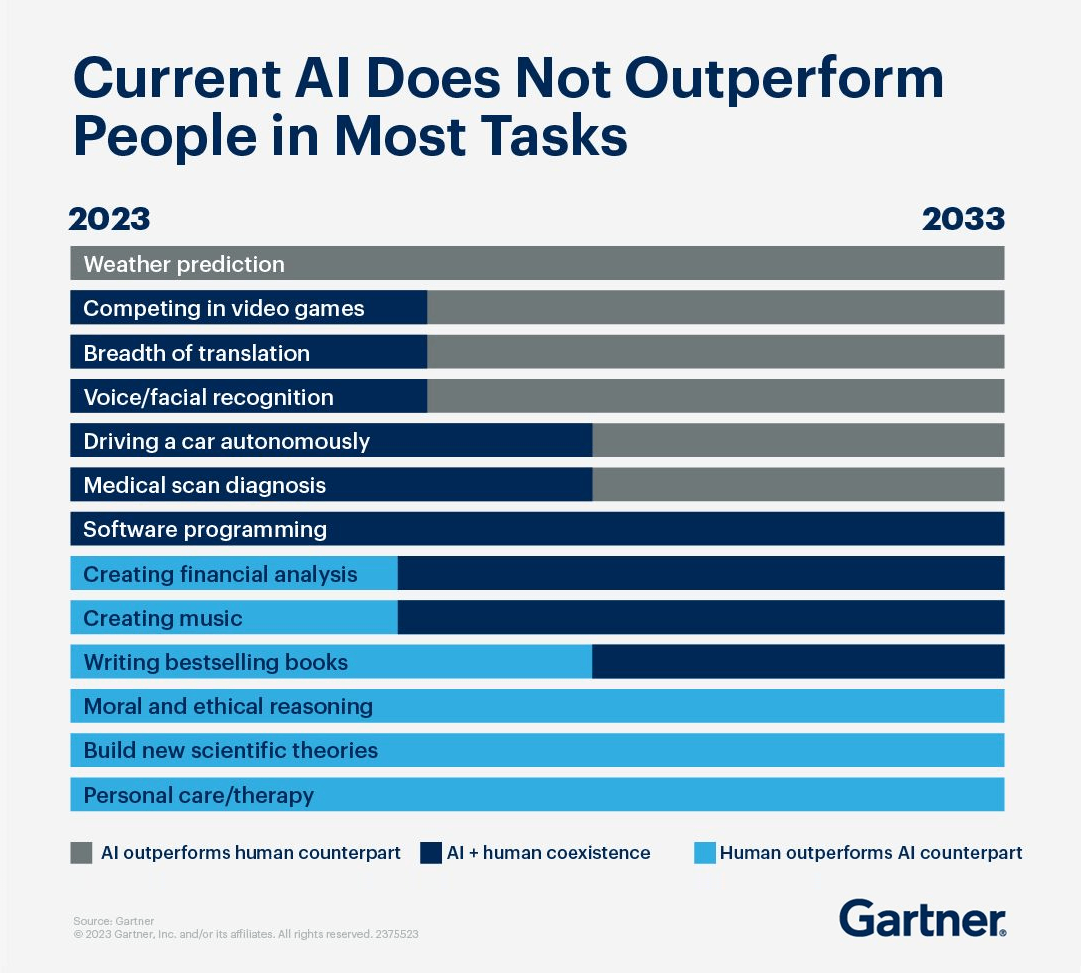

That said, AI is not currently capable of outperforming humans at the vast majority of tasks. Three skills in particular are going to be secure through 2033: personal care and therapy, building new scientific theories, and moral and ethical reasoning. According to the study, humans will remain better at these skills than AI until at least 2033.

Three other skills are also in the clear for now: AI cannot come close to humans at them this year, though it is expected to be on par with humans at some point in the coming decade. These skills are writing bestselling books, creating music and conducting financial analyses. When AI does catch up, it will likely complement the people performing these jobs rather than replace them entirely.

Of course, there are some jobs that AI will eventually do better than humans even if it’s not currently on par. Software programming is a skill at which AI is already equal to humans, although it remains a useful tool rather than a replacement and won’t be displacing humans anytime soon. Apart from that, medical scan diagnosis, driving, facial recognition, translation and playing video games are all skills in which AI has equalled humans, and by the late 2020s it is expected to have replaced them.

Most of these skills, save for medical scan diagnosis, do not correspond to especially valuable jobs, which suggests that not many jobs are going to be eliminated, at least in the near future. The only skill that has already become obsolete in the face of AI is weather prediction, with most others holding their own for the time being.

Read next: AI Taking Jobs Trend Soared by 304% Globally in the Past Year, Led by Heightened Australian Concern

by Zia Muhammad via Digital Information World

"Mr Branding" is a blog based on RSS for everything related to website branding and website design, it collects its posts from many sites in order to facilitate the updating to the latest technology.

To suggest any source, please contact me: Taha.baba@consultant.com

Thursday, December 28, 2023

Wednesday, December 27, 2023

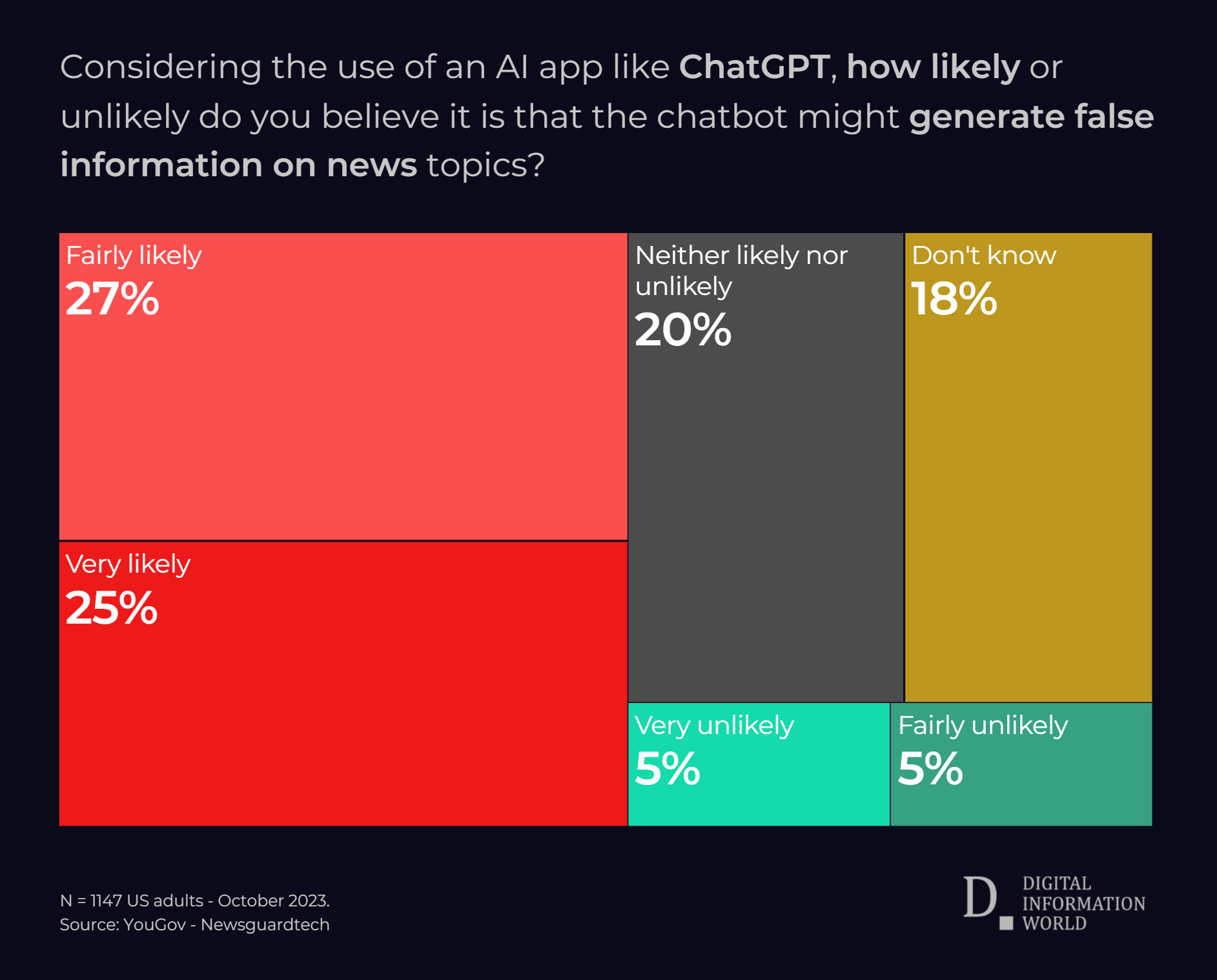

52% of Survey Respondents Think AI Spreads Misinformation

Generative AI has the ability to completely change the world, but many are worried about its ability to create and spread misinformation. A survey conducted by YouGov on behalf of NewsGuard revealed that as many as 52% of Americans think it’s likely that AI will create misinformation in the news, with just 10% saying that the chances of this happening are low.

Generative models have a tendency to hallucinate. This means that the chatbot will generate information based on prompts regardless of whether or not it is factual. This doesn’t happen all the time, but it occurs often enough that content produced by AI can’t always be trusted and is more likely to contain false news.

When researchers tested GPT-3.5, they found that ChatGPT provided misinformation in 80% of cases. The prompts were specifically engineered to find out whether the chatbot was prone to misinformation, and it failed the test in the vast majority of cases.

There is a good chance that people will start using generative AI as their primary news source because it can provide instant answers. However, with so much misinformation being fed into the data it draws on, the risks will become increasingly difficult to ignore.

Election misinformation, conspiracy theories and other false narratives commonly appear in ChatGPT’s output. In one example, researchers successfully made the model parrot a conspiracy theory about the Sandy Hook mass shooting, with the chatbot saying that it was staged. Similarly, Google Bard stated that the shooting at the Pulse nightclub in Orlando was a false flag operation. Both of these conspiracy theories are commonplace among the far right.

It is imperative that the companies behind these chatbots take steps to prevent them from spreading conspiracy theories and other misinformation that can cause tremendous harm. Until that happens, the dangers they create will compound over the next few years.

Read next: Global Surge in Interest - Searches for 'AI Job Displacement' Skyrocketed by 304% Over the Past Year,

by Zia Muhammad via Digital Information World

ChatGPT Has Changed Education Forever, Here’s What You Need to Know

When ChatGPT was first released to the public, it saw a massive spike in usage. When usage began to decline, people assumed that the AI was losing its appeal and entering the trough of disillusionment. It turns out, however, that this dip was due to something else entirely: summer break. Students who had been using ChatGPT to help with homework returned in September, and usage went back up.

The LLM-based chatbot might be changing education for good. Recent interviews conducted by Vox with several high school and college students, as well as educators at various levels, show the problems that come with ChatGPT’s popularity among learners.

The first major issue is that some teachers think ChatGPT can be used to cheat. This is certainly a pertinent concern because it could allow students to simply generate answers to all of their questions. However, ChatGPT can also help students break down concepts and aid them in the learning process.

Another problem with ChatGPT is that it doesn’t always present factual information. This raises the issue that students may end up learning the wrong things, and it is by no means the only concern that educators and students have about the chatbot right now.

Another issue worth mentioning is that ChatGPT could be similar to Google Maps, in that it may make people too reliant on it and incapable of learning on their own. Google Maps is known to decrease spatial awareness, and the harm could be far more severe in the case of ChatGPT since it touches on multiple types of intelligence rather than just spatial awareness.

There are two types of learning that students can choose from. One is passive learning, in which a student sits back and listens to an expert give a lecture. The other is active learning, in which groups of students try to figure out a problem and have an expert intervene only when they need help.

Studies have shown that students largely prefer the first method, but the second has been shown to help them get better test scores. Using ChatGPT for learning will make active learning a thing of the past, and that needs to be addressed sooner rather than later.

Photo: Digital Information World - AIgen

Read next: Searches for "AI Taking Jobs" Soared by 304% Globally in the Past Year, Led by Heightened Australian Concern

by Zia Muhammad via Digital Information World

Searches for "AI Taking Jobs" Soared by 304% Globally in the Past Year, Led by Heightened Australian Concern

- US searches on "AI taking jobs" surged by 276% in Dec. 2023 (when compared with the same month of last year), led by California and Virginia.

- Overall, global interest in the topic has surged by 9,200% since December 2013.

- The current likelihood of AI replacing jobs is low, as it mainly serves as a tool for professionals, but future advancements may pose a higher risk.

- Google Trends shows a 2,666% rise in searches for 'AI jobs,' indicating potential job creation; 2024 will be a critical test for concerns about job obsolescence.

When comparing December 2023 data to December of 2013, US search interest in this term has jumped up by approximately 7,800%.

Looking at the global picture and comparing against last year’s trends (December 2022), Australia is showing an even higher level of concern than the US, with Canada, the Philippines and the UK following it in the list. In the US, this search term was used 276% more frequently, which is 28 percentage points lower than the global average of 304%.
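The comparison above is simple arithmetic, and a short sketch may make it easier to check. The following Python snippet shows how a percentage increase is computed and how the 28-point gap falls out of the two reported figures. The function names and the sample index values (100 and 376) are hypothetical and chosen purely for illustration; only the 276% and 304% figures come from the article.

```python
def percent_increase(old: float, new: float) -> float:
    """Return the percentage increase from `old` to `new`."""
    if old == 0:
        raise ValueError("old value must be non-zero")
    return (new - old) * 100.0 / old


def multiplier_from_increase(increase_pct: float) -> float:
    """Convert a percentage increase into a 'times the original' multiplier."""
    return 1.0 + increase_pct / 100.0


# Figures reported in the article.
us_increase = 276.0
global_increase = 304.0

# Gap between the two increases, in percentage points.
gap_points = global_increase - us_increase

# Hypothetical index values (not from the article): a search index
# that moves from 100 to 376 corresponds to a 276% increase.
example_increase = percent_increase(100.0, 376.0)

print(f"Gap: {gap_points:.0f} percentage points")
print(f"US searches are {multiplier_from_increase(us_increase):.2f}x the earlier level")
print(f"Global searches are {multiplier_from_increase(global_increase):.2f}x the earlier level")
```

Note that a 276% increase means searches are roughly 3.76 times their earlier level, not 2.76 times, which is a common point of confusion when reading figures like these.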

This suggests that the US is not quite as concerned as some other nations, with the global increase in the search term "Artificial intelligence taking jobs" being around 9,200% since December 2013 compared to 7,300% for America. With this term reaching an all-time high, paying attention to consumer concerns will be critical because doing so could help mitigate any fears they might have.

The likelihood that AI will replace jobs is currently rather low, with generative AI largely serving as a tool for professionals rather than an outright replacement. Even so, AI will become more advanced in the future, which will make it more likely to take jobs away from people.

On the flip side, Google Trends insights from the past decade, compiled by the Digital Information World team, show that AI might be creating a decent number of jobs. The data reveals a 2,666% increase in searches for 'AI jobs,' with most of them coming from Singapore, Kenya, and Pakistan. Furthermore, statistics indicate that data science and machine learning were among the top search queries and topics.

It will be interesting to see where things go from here, since global sentiment can be surmised from the findings above. They have been gleaned from Google Trends and reveal growing trepidation that certain jobs will become obsolete, and 2024 will prove to be a litmus test of whether these concerns have any factual basis.

Read next: GenAI Prism - This Infographic Illuminates the Expansive Universe of Generative AI Tools

by Zia Muhammad via Digital Information World

Japan All Set To Enable More Third-Party App Stores And Payment Systems For iOS And Android Devices

It’s news that has been speculated about for months, and now we’re finally seeing progress toward enabling more third-party app stores and payment options for both Android and iOS users in Japan.

Developments continue to arise after the country’s government opted to submit its proposal as early as next year. This could mark a new beginning for the country’s tech world.

The news was first reported by the Japanese media outlet Nikkei, which described how the proposal will soon make its way to the country’s national legislature, the Diet. It went on to explain how this would stop firms that provide smartphone operating systems from abusing their monopoly power in the app store industry.

This would create more room for competition in the tech world, especially for firms finding it hard to compete against the big names of the smartphone world.

If and when it’s passed, the nation’s Fair Trade Commission would be the regulatory body overseeing this endeavor and making sure things go as planned, the report added.

This is in line with a broader trend that is forcing organizations such as Apple and Google to make apps available through a greater number of app stores.

At the start of this month, Google made it clear that it would agree to a $700 million settlement covering nearly 50 different states. The settlement would make sideloading applications on Android easier and would also allow other billing options on the platform.

iPhone maker Apple is also under scrutiny in the EU over allowing app sideloading on iOS devices. After the DMA was rolled out in the European Union in 2022, a number of big names came under scrutiny under the new regulations for tech giants. Apple is on the list of companies regarded as gatekeepers of the industry, which means it must open up a range of options so that third parties may benefit.

For now, the Cupertino firm needs to comply with the DMA by early next year, with March 4, 2024, highlighted as the deadline to make the change. We’ve also heard about software giant Microsoft working on enabling third-party mobile app stores for gaming, but that news has not been confirmed so far.

Other reports detailed how Apple is going to give sideloading permission to EU users with iPhones and iPads at the start of next year, right before the deadline arrives. So as you can imagine, it’s a race against time, and we’ll keep you updated on this front.

Photo: Digital Information World - AIgen

Read next: How Do X (Twitter) Users Feel About Blue Ticks?

by Dr. Hura Anwar via Digital Information World

Tuesday, December 26, 2023

How Do X (Twitter) Users Feel About Blue Ticks?

Blue ticks used to be a way for celebrities and public figures to maintain some semblance of authenticity online. They were essential because they differentiated legitimate accounts from parody accounts and impersonators, but Elon Musk’s tenure has changed this dynamic considerably. It is now possible to acquire a blue verified badge simply by subscribing to X and paying a monthly fee.

This has changed how people feel about the blue tick in general. According to a survey conducted by YouGov on behalf of NewsGuard, 42% of X users think that a blue tick is obtained solely through payment. 25% are of the opinion that blue tick users received their distinction because their accounts have high levels of authenticity, and 15% believe that the blue tick is a sign of higher credibility. 5% selected none of the options, and 13% said that they didn’t know.

Around 6 in 10 X users don't know that a blue tick can be purchased through a subscription, and instead continue to assume that blue ticks are marks of legitimacy. Such a belief is dangerous because it can make users trust accounts that have bought their verification status, many of which have spread misinformation across the site.

With only around 4 in 10 users aware of the paid nature of blue checks, the propensity for fake news to spread like wildfire will become a constant concern. Elon Musk’s free speech absolutism is clearly resulting in misconceptions among users, and bad actors are capitalizing on this to turn X into a place where no information can be fully trusted.
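The "6 in 10" and "4 in 10" figures follow from the survey shares reported above, and a brief sketch can confirm that the numbers are consistent. The snippet below is only an illustration: the dictionary keys are shorthand labels invented here, not the survey's actual answer wording, and the rounding helper is a hypothetical convenience.

```python
# Survey shares as reported in the article (percent of X users).
# The keys are shorthand labels, not the survey's exact wording.
shares = {
    "obtained solely through payment": 42,
    "high levels of authenticity": 25,
    "sign of higher credibility": 15,
    "none of the options": 5,
    "don't know": 13,
}

# The shares should account for every respondent.
total = sum(shares.values())

# Respondents who identified the blue tick as a paid feature,
# and everyone else.
aware = shares["obtained solely through payment"]
unaware = total - aware


def in_ten(percent: int) -> int:
    """Express a percentage as a rounded 'N in 10' figure."""
    return round(percent / 10)


print(f"Total: {total}%")
print(f"Aware of paid verification: {aware}% (about {in_ten(aware)} in 10)")
print(f"Not aware: {unaware}% (about {in_ten(unaware)} in 10)")
```

Treating everyone outside the 42% as unaware is the reading the article itself takes; respondents who chose "none of the options" or "don't know" are counted in that group.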

Read next: How Does Authorship Work in the Age of AI? This Study Reveals the Answers

by Zia Muhammad via Digital Information World

How Does Authorship Work in the Age of AI? This Study Reveals the Answers

The rise of LLMs is creating a situation in which massive quantities of text can be generated at the drop of a hat. Even so, it’s not always clear what people think about the question of authorship in this brave new world.

LLMs use human-generated text for training purposes, and they can be tweaked to mimic a particular writing style down to the last letter, so the question of authorship is a pertinent one. Researchers at the Institute for Informatics at LMU therefore tried to find an answer to this question.

Test participants were divided into two groups, and both were asked to write postcards. One group had to write these postcards on their own, whereas the other was able to use an LLM to get the job done. Once the postcards were written, participants were asked to upload them and provide some context on authorship.

People felt more strongly about their own authorship if they were more heavily involved in the creative process. However, many of the people who used LLMs still credited themselves as authors, which is similar to ghostwriting in several ways.

This goes to show that perceived ownership and authorship don’t necessarily go hand in hand. If the writing style was close enough to their own, participants had no problem claiming that the writing in question was theirs.

The authorship question is pertinent because it could determine whether or not people trust the content that they read online. The willingness of participants to put their own name on a piece of text that was generated almost entirely by AI goes to show that much of the content online may very well end up being created through LLMs, and readers might not know about it. The key is transparency, though it remains to be seen whether people using LLMs would be willing to declare it.

Read next: This GenAI Prism Highlights the Expansive Universe of Generative AI Tools

by Zia Muhammad via Digital Information World
